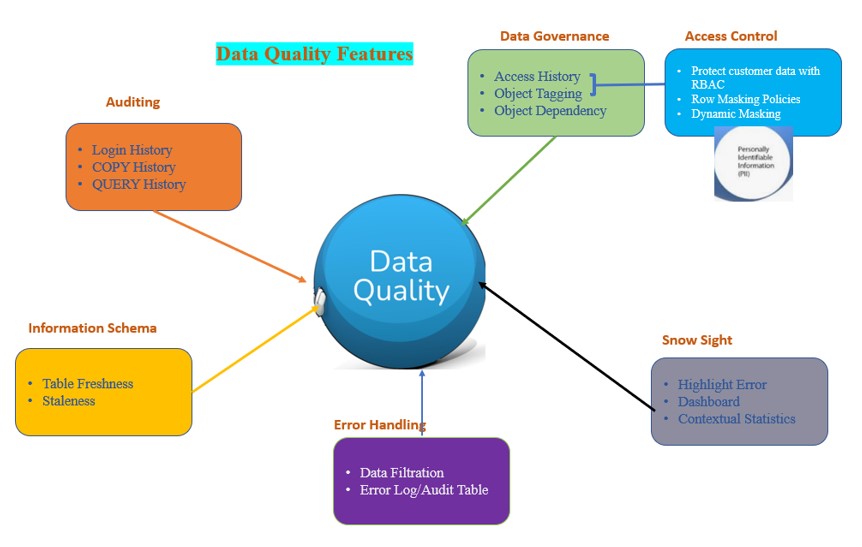

During the last post we discussed about Data Governance, a feature of Data Quality. In continuation of the same we will talk about the other features i.e. Auditing, Error Handling, Snowsight, Information Schema to identify Stale tables.

Auditing:

- LOGIN_HISTORY: Comprehensive Audit Trail for all the activity by all users from LOGIN. It stores for 365 days in customer tamper proof area of account. This area includes session, login attempt, and queries details. Data Stewards are now able to log and alert on who accesses the data. They also identify the users who are trying to login into the system in unauthorized way. The ability to track the movement of all data and users through its entire life cycle provides protection to the business and their customers.

SELECT EVENT_TIMESTAMP, EVENT_ID, EVENT_TYPE, USER_NAME, CLIENT_IP, REPORTED_CLIENT_TYPE, REPORTED_CLIENT_VERSION, FIRST_AUTHENTICATION_FACTOR, SECOND_AUTHENTICATION_FACTOR, IS_SUCCESS, ERROR_CODE, ERROR_MESSAGE, RELATED_EVENT_ID FROM SNOWFLAKE.ACCOUNT_USAGE.LOGIN_HISTORY;

- QUERY_HISTORY: To identify the queries run by user in Snowflake. Also helps to determine long runner queries or faulty queries. After the analysis we can correct or remove the erroneous queries from the system.

- COPY_HISTORY: The view displays load activity for both COPY INTO <table> statements and continuous data loading using Snowpipe.

Note: Though this data is retained for 365 days, in case of retaining the older 1 year data we have other customized ways and will share the approach in other post.

Stale tables:

Information Schema to identify Stale tables: Consider the scenario where the storage cost jumped up suddenly in last couple of months. Even though End users are accessing the limited number of tables and they are aware about the transactions on these tables. Possible root cause of these high cost is there are certain huge tables which are not using by end users since long time. These tables are not providing any benefit and on other hand contributing to the storage cost. We can use information_schema to identify the stale tables and drop unused tables to save money on storage costs.

Below query is use to identify all stale tables which are no longer access in past 30 days. We can modify this criterion based on the business requirement. Once the Stale tables are identifying, we can set the data retention to 0 and drop these tables. This way we will save storage and Time travel/Failsafe cost

SELECT TABLE_CATALOG,TABLE_SCHEMA,TABLE_NAME,ROW_COUNT,BYTES,

CREATED,LAST_ALTERED FROM DEMO_DB.information_schema.tables

where LAST_ALTERED < dateadd('day', -30, current_date())

ORDER BY TABLE_CATALOG, TABLE_SCHEMA, TABLE_NAME;

Error Handle:

Error Handling: Develop Error handling framework, to handle the Erroneous records while copying the data from one table to another in snowflake. Consider the scenario where we are copying the data from one of our staging tables to the target table. In case if any error occurs during the copy operation, it should be log into the Audit or Error Log While the successful records should be copy to the Target table. So in short, Instead of process getting fail in case of any error, process should continue while logging the error into Error log table. Hence this process handles the Data quality more efficiently.

Refer: https://cloudyard.in/2021/10/snowflake-error-handling-framework/

Snowsight: Data Visualization. Snow sight helps you to visualize data in the form of a chart, diagram, picture, etc. It helps sets up a clear framework and helps to understand the data better to measure its impact on the business.

- Highlight Error: Snowsight helps to identify the error in query by highlighting the faulty code or line.

- Dashboard: Able to generate the chart in snowflake itself without integrating the external BI tools.

- Automatic Contextual Statistics: Snowflake automatically calculates these statistics and helps you to understand data better.

- Autocomplete: which helps complete the query for you to minimize mistakes.

Therefore, These are some of the Data quality features provided by Snowflake and leveraging these in our process will definitely reduce the risk of data corruption.

one of best website for snowflake developers learning many things