
Copy AWS S3 to Gen2 to Snowflake: In the previous post, we migrated data from AWS S3 to Azure Data Lake Storage (ADLS) Gen2, so the feed files from S3 are now available in Azure Storage. To continue our use case, we will now load that data from Gen2 into Snowflake with Azure Data Factory (ADF). To start with:

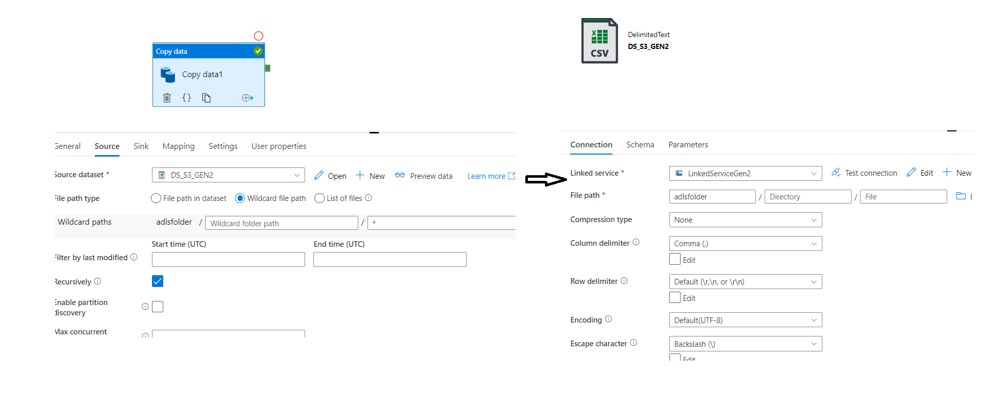

- We will use the existing Gen2 linked service, LinkedServiceGen2 (created in the last post), to read data from ADLS Gen2.

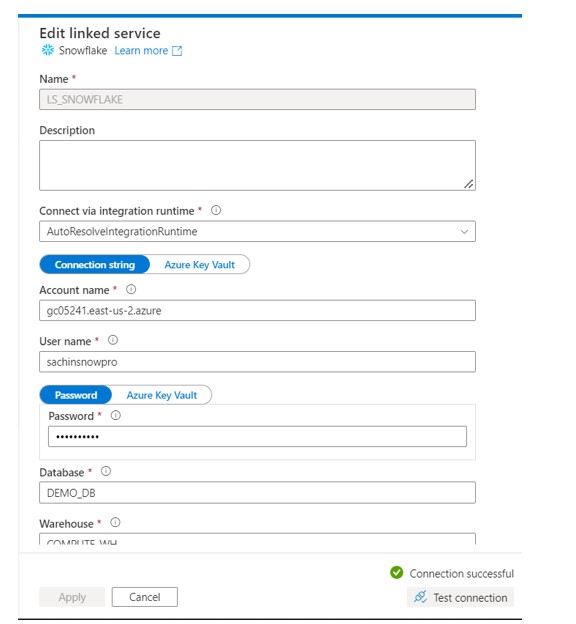

- Create a new linked service pointing to Snowflake.

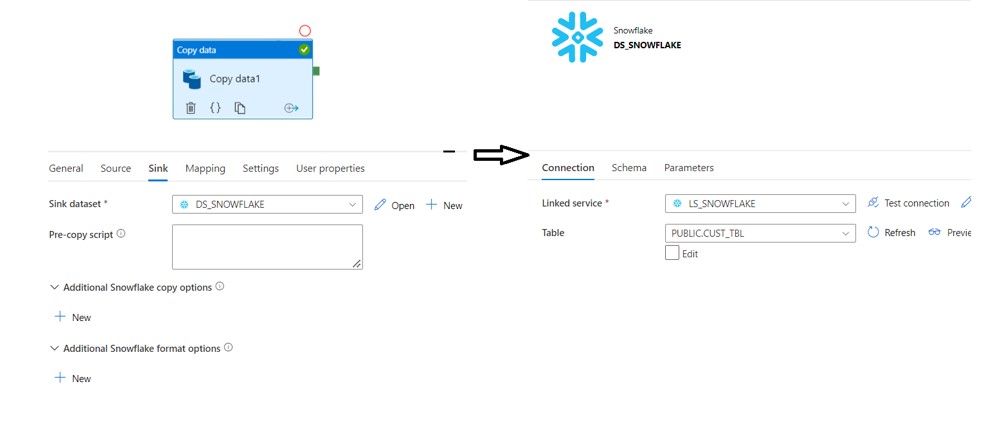
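The Snowflake linked service from this step is stored by ADF as a JSON definition. Below is a minimal Python sketch that builds that JSON, assuming the original (legacy) Snowflake connector, which takes a JDBC-style `connectionString`. Newer Data Factory versions also offer a Snowflake V2 connector that uses different properties. The linked-service name `LinkedServiceSnowflake` and every account value are hypothetical. In a real setup, keep the password in Azure Key Vault rather than in the connection string.

```python
import json
from typing import Optional
from urllib.parse import urlencode


def snowflake_linked_service(
    name: str,
    account: str,
    user: str,
    password: str,
    database: str,
    warehouse: str,
    role: Optional[str] = None,
) -> dict:
    """Return an ADF linked-service definition for the legacy Snowflake connector.

    Assumption: the connection is described by a JDBC-style connection string.
    In practice the password should come from Azure Key Vault, not plain text.
    """
    params = {"user": user, "password": password, "db": database, "warehouse": warehouse}
    if role:
        params["role"] = role
    connection_string = f"jdbc:snowflake://{account}.snowflakecomputing.com/?{urlencode(params)}"
    return {
        "name": name,
        "properties": {
            "type": "Snowflake",
            "typeProperties": {"connectionString": connection_string},
        },
    }


# Hypothetical values for illustration only.
linked_service = snowflake_linked_service(
    "LinkedServiceSnowflake", "myaccount", "loader", "secret", "DEMO_DB", "COMPUTE_WH"
)
print(json.dumps(linked_service, indent=2))
```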

- Create the pipeline with the source and target configured as follows:

- Source pointing to LinkedServiceGen2

- Target pointing to Snowflake

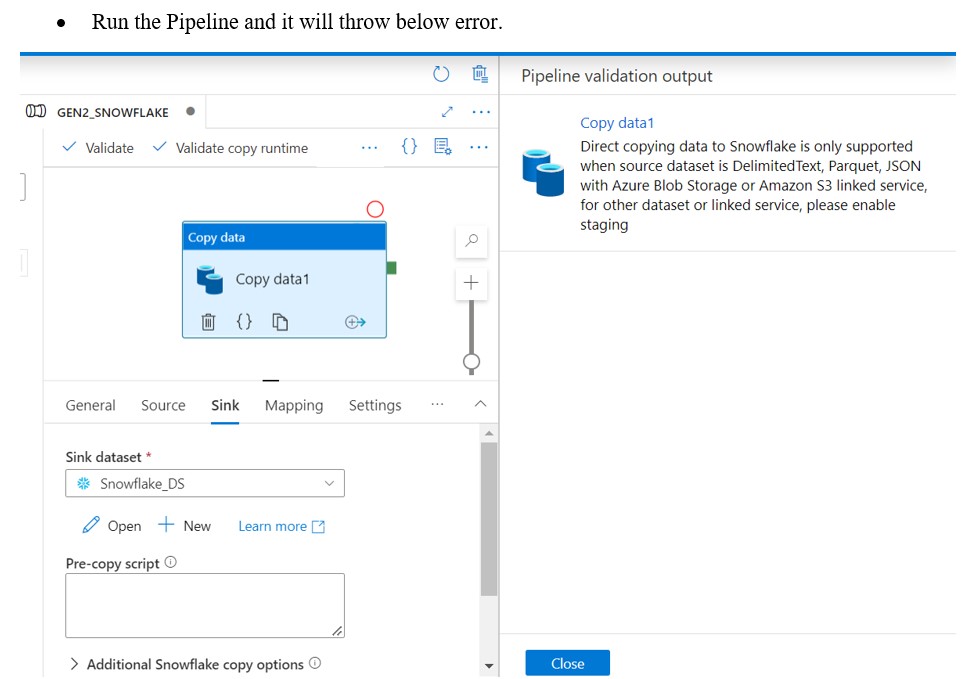
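In ADF, a Copy activity does not reference linked services directly. It reads from and writes to datasets, and those datasets point at LinkedServiceGen2 and the Snowflake linked service. The sketch below builds the JSON for such a source dataset, a Snowflake table dataset and the Copy activity, assuming a comma-delimited CSV with a header row. All dataset, pipeline, container, folder, file, schema and table names are hypothetical placeholders.

```python
import json


def ref(name: str, kind: str) -> dict:
    """ADF reference object pointing at a linked service or dataset."""
    return {"referenceName": name, "type": kind}


def gen2_csv_dataset(name: str, file_system: str, folder: str, file_name: str) -> dict:
    """DelimitedText dataset reading one CSV file through LinkedServiceGen2."""
    return {
        "name": name,
        "properties": {
            "type": "DelimitedText",
            "linkedServiceName": ref("LinkedServiceGen2", "LinkedServiceReference"),
            "typeProperties": {
                "location": {
                    "type": "AzureBlobFSLocation",  # location type used for ADLS Gen2
                    "fileSystem": file_system,
                    "folderPath": folder,
                    "fileName": file_name,
                },
                "columnDelimiter": ",",
                "firstRowAsHeader": True,
            },
        },
    }


def snowflake_table_dataset(name: str, linked_service: str, schema: str, table: str) -> dict:
    """Dataset pointing at the target Snowflake table."""
    return {
        "name": name,
        "properties": {
            "type": "SnowflakeTable",
            "linkedServiceName": ref(linked_service, "LinkedServiceReference"),
            "typeProperties": {"schema": schema, "table": table},
        },
    }


def copy_activity(name: str, source_dataset: str, sink_dataset: str) -> dict:
    """Copy activity: CSV from Gen2 (source) into Snowflake (sink), no staging yet."""
    return {
        "name": name,
        "type": "Copy",
        "inputs": [ref(source_dataset, "DatasetReference")],
        "outputs": [ref(sink_dataset, "DatasetReference")],
        "typeProperties": {
            "source": {
                "type": "DelimitedTextSource",
                "storeSettings": {"type": "AzureBlobFSReadSettings", "recursive": True},
            },
            "sink": {
                "type": "SnowflakeSink",
                "importSettings": {"type": "SnowflakeImportCopyCommand"},
            },
        },
    }


# All names below are hypothetical placeholders.
source = gen2_csv_dataset("SourceGen2Csv", "feeds", "incoming", "feed.csv")
sink = snowflake_table_dataset("SinkSnowflakeTable", "LinkedServiceSnowflake", "PUBLIC", "FEED")
pipeline = {
    "name": "PipelineGen2ToSnowflake",
    "properties": {
        "activities": [copy_activity("CopyGen2ToSnowflake", source["name"], sink["name"])]
    },
}
print(json.dumps(pipeline, indent=2))
```

This first version has no staging settings, which is what leads to the error discussed next.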

However, running this pipeline fails with the following error:

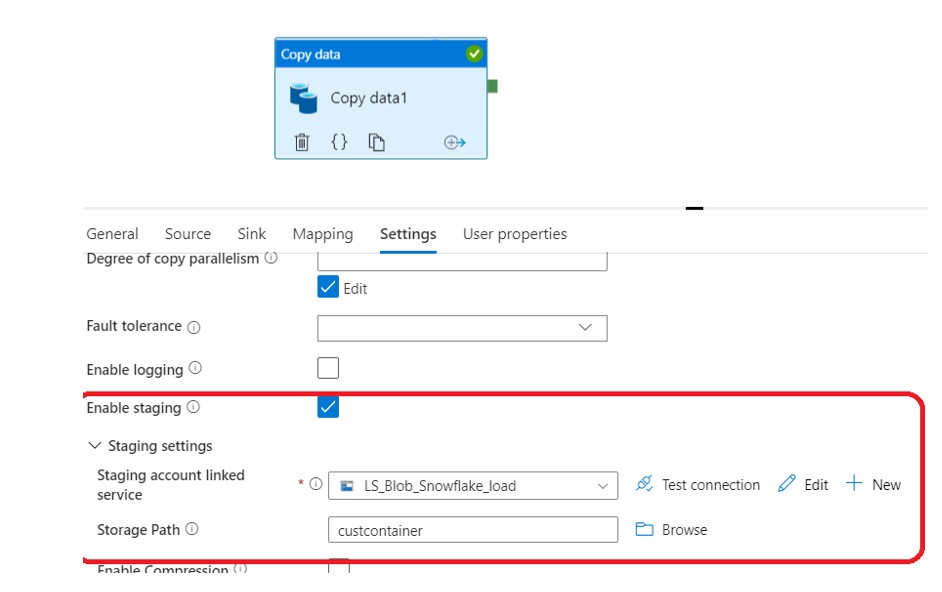

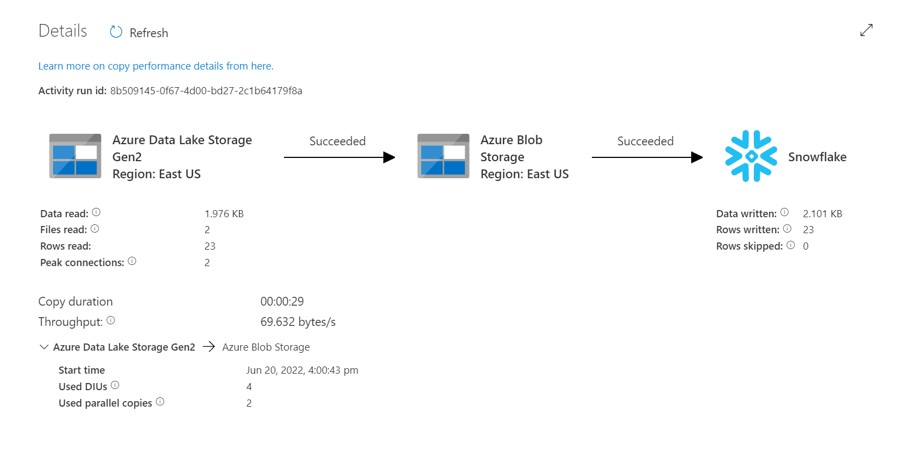

When copying data from Azure Data Lake Storage Gen2 to Snowflake, Azure Data Factory uses a storage account as a staging area. If staging is not configured, Data Factory raises this error, even when the source is a plain CSV file in Azure Data Lake. To resolve the issue, we need to create a staging area that points to Azure Blob Storage. The data is then first loaded into Blob Storage temporarily and finally ingested into Snowflake.
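Why does this happen even for a simple CSV? According to the ADF Snowflake connector documentation, a direct (unstaged) copy into Snowflake is only possible when the source linked service is Azure Blob Storage with shared access signature (SAS) authentication and the data is in a supported format such as delimited text, Parquet or JSON. A source read through an ADLS Gen2 linked service (type `AzureBlobFS`) does not meet the first condition. The check below is a simplified sketch of that rule, not ADF's actual logic. The class, the function and the `AccountKey` auth label assumed for LinkedServiceGen2 are hypothetical, and the real criteria also constrain format settings that are ignored here.

```python
from dataclasses import dataclass

# Simplified reading of the "direct copy to Snowflake" rule (assumption:
# format-level settings such as compression or delimiters are ignored here).
DIRECT_COPY_FORMATS = {"DelimitedText", "Parquet", "Json"}


@dataclass
class Source:
    linked_service_type: str  # e.g. "AzureBlobFS" (ADLS Gen2) or "AzureBlobStorage"
    auth: str                 # e.g. "AccountKey", "SAS", "ManagedIdentity"
    data_format: str          # e.g. "DelimitedText"


def needs_staged_copy(src: Source) -> bool:
    """True when the source does not qualify for a direct COPY into Snowflake."""
    direct = (
        src.linked_service_type == "AzureBlobStorage"
        and src.auth == "SAS"
        and src.data_format in DIRECT_COPY_FORMATS
    )
    return not direct


# The scenario in this post: a CSV read through the Gen2 linked service.
gen2_csv = Source("AzureBlobFS", "AccountKey", "DelimitedText")
print(needs_staged_copy(gen2_csv))  # True: a supported format alone is not enough
```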

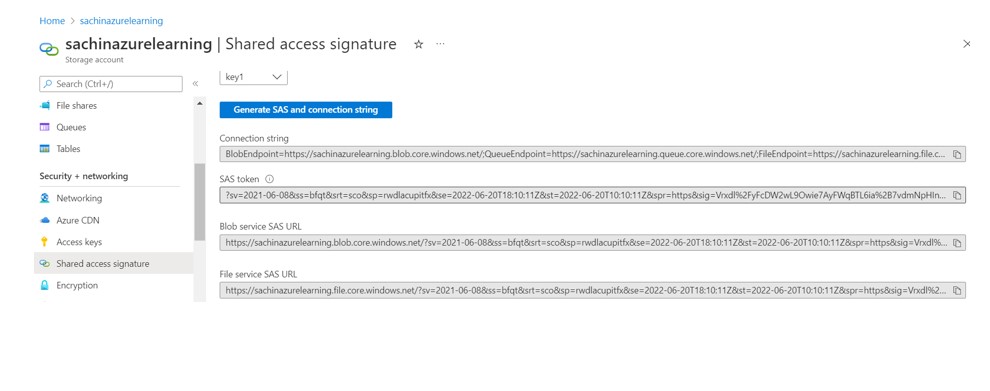

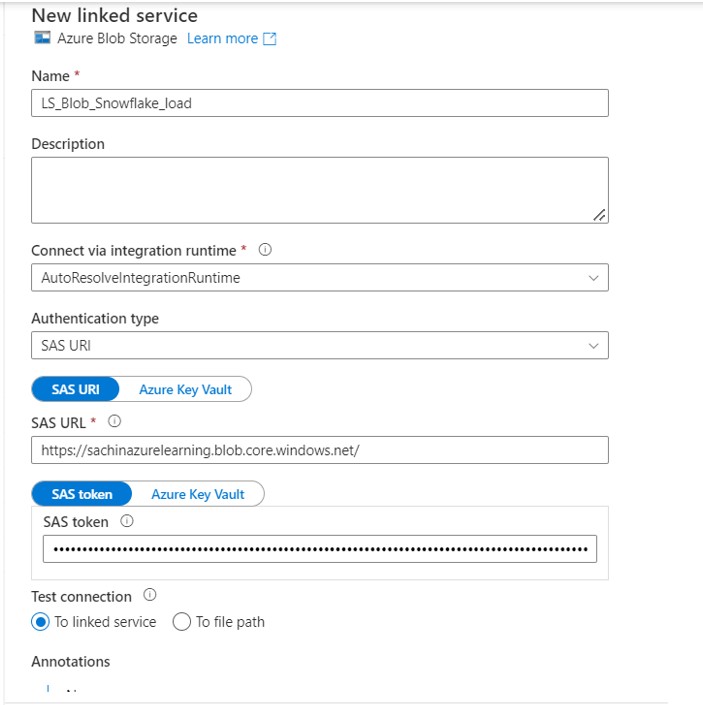

- Create a linked service pointing to Azure Blob Storage, authenticated with a SAS URI.

- Generate and copy the SAS token.

- Copy the SAS URL as well.

- Now modify the pipeline: enable staging and select the new Blob linked service as the staging store.

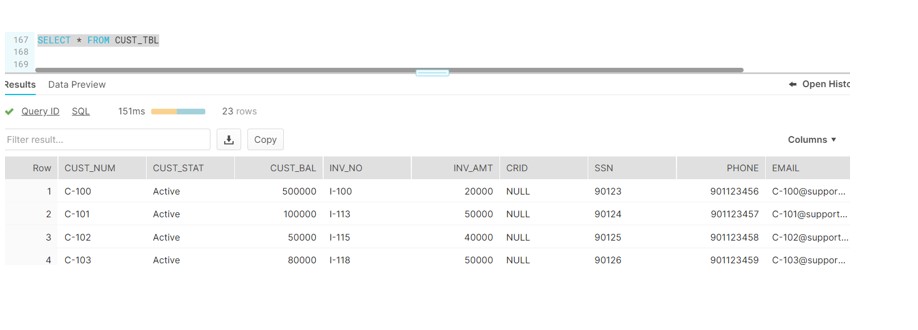
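The SAS-based Blob linked service and the staging change to the pipeline can be sketched as Python builders for the JSON that ADF stores. The ADF Snowflake connector documentation states that the staging Blob linked service must use SAS authentication, because Snowflake's COPY command reads the interim files with it. `enableStaging` and `stagingSettings` are the Copy activity's staged-copy properties. The function names, storage account, container, token and the linked-service name `LinkedServiceBlobStaging` are hypothetical, and the token check is only a light sanity check, not full SAS validation.

```python
import copy
from urllib.parse import parse_qs


def build_sas_uri(account: str, container: str, sas_token: str) -> str:
    """Join the Blob endpoint, container and SAS token into a SAS URI."""
    token = sas_token.lstrip("?")  # the token is often shown with a leading '?'
    params = parse_qs(token)
    # Light sanity check: SAS tokens carry a version (sv) and a signature (sig).
    missing = {"sv", "sig"} - set(params)
    if missing:
        raise ValueError(f"SAS token is missing {sorted(missing)}")
    return f"https://{account}.blob.core.windows.net/{container}?{token}"


def blob_sas_linked_service(name: str, sas_uri: str) -> dict:
    """Azure Blob Storage linked service authenticated with a SAS URI."""
    return {
        "name": name,
        "properties": {
            "type": "AzureBlobStorage",
            "typeProperties": {"sasUri": {"type": "SecureString", "value": sas_uri}},
        },
    }


def with_staging(activity: dict, staging_linked_service: str, path: str) -> dict:
    """Return a copy of a Copy activity with Blob staging enabled."""
    staged = copy.deepcopy(activity)  # leave the original definition untouched
    props = staged["typeProperties"]
    props["enableStaging"] = True
    props["stagingSettings"] = {
        "linkedServiceName": {
            "referenceName": staging_linked_service,
            "type": "LinkedServiceReference",
        },
        "path": path,  # container (and optional folder) for the interim files
    }
    return staged


# Hypothetical values; a real token is generated in the Azure portal or via an SDK.
sas_uri = build_sas_uri("mystagingacct", "staging", "?sv=2020-08-04&sp=racwdl&se=2030-01-01&sig=abc123")
staging_ls = blob_sas_linked_service("LinkedServiceBlobStaging", sas_uri)

activity = {
    "name": "CopyGen2ToSnowflake",
    "type": "Copy",
    "typeProperties": {
        "source": {"type": "DelimitedTextSource"},
        "sink": {"type": "SnowflakeSink"},
    },
}
staged_activity = with_staging(activity, "LinkedServiceBlobStaging", "staging")
```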

- Verify the target table in Snowflake.

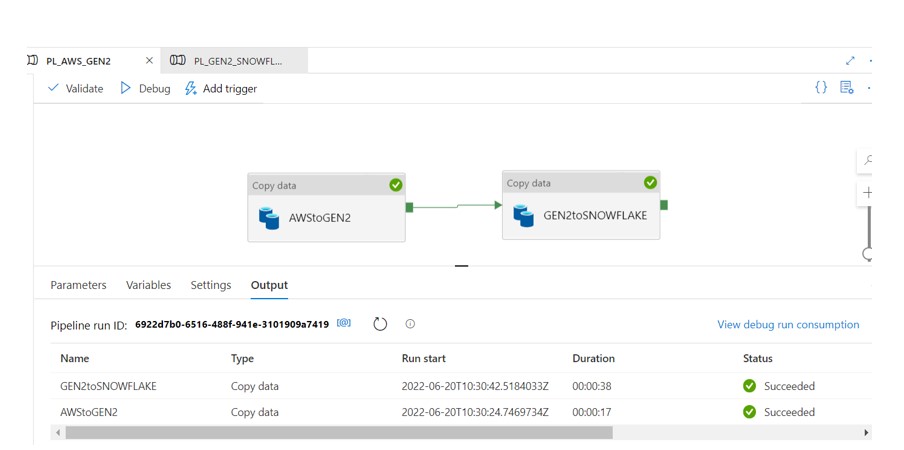

- Run the complete pipeline.

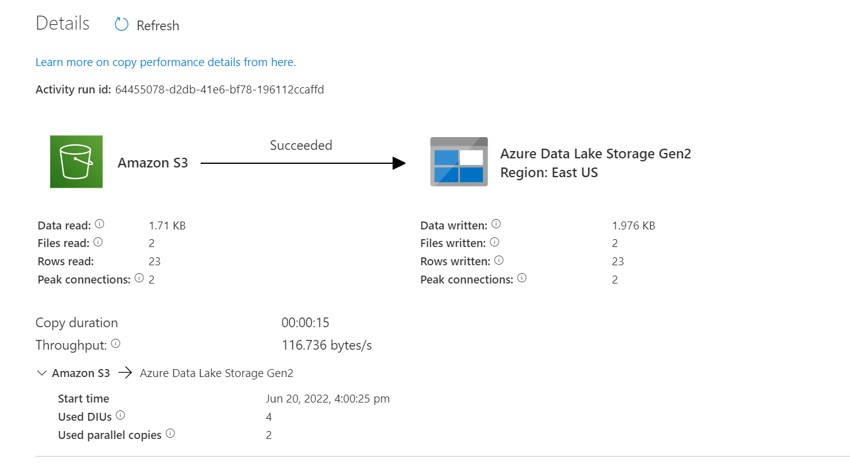
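Besides triggering the run from the Data Factory UI, it can also be started and monitored from code. The sketch below only implements the polling loop, using the ADF pipeline-run states (`Succeeded`, `Failed` and `Cancelled` are terminal). The function name, poll interval and timeout are hypothetical. The commented lines show how it might be wired to the `azure-mgmt-datafactory` SDK via `pipelines.create_run` and `pipeline_runs.get`; that part is not executed here, and `client`, `rg` and `factory` are assumed to exist.

```python
import time
from typing import Callable

# Terminal states of an ADF pipeline run (Queued, InProgress, Canceling are not).
TERMINAL_STATES = {"Succeeded", "Failed", "Cancelled"}


def wait_for_run(
    get_status: Callable[[], str],
    poll_seconds: float = 15.0,
    timeout_seconds: float = 3600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll get_status() until the run reaches a terminal state, or time out."""
    waited = 0.0
    while True:
        status = get_status()
        if status in TERMINAL_STATES:
            return status
        if waited >= timeout_seconds:
            raise TimeoutError(f"run still {status!r} after {waited:.0f}s")
        sleep(poll_seconds)
        waited += poll_seconds


# Possible wiring with azure-mgmt-datafactory (assumed objects, not run here):
#   run = client.pipelines.create_run(rg, factory, "PipelineGen2ToSnowflake")
#   status = wait_for_run(lambda: client.pipeline_runs.get(rg, factory, run.run_id).status)

# Offline demo with a fake status source and no real sleeping.
fake_statuses = iter(["Queued", "InProgress", "InProgress", "Succeeded"])
result = wait_for_run(lambda: next(fake_statuses), sleep=lambda _: None)
print(result)  # Succeeded
```

Injecting `sleep` keeps the loop testable without waiting in real time.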

- Verify the output and the run log.

- Verify the data in Snowflake.
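When checking the output, the Copy activity's output JSON in the ADF monitoring view reports counters such as `rowsRead` and `rowsCopied`, plus an `errors` list. The sketch below compares the counters and surfaces any reported errors. The function name and the sample values are made up, and since some fields can be absent depending on the copy mode, the function reports a missing counter instead of assuming a value.

```python
def check_copy_output(output: dict) -> list:
    """Return a list of problems found in a Copy activity's output JSON."""
    problems = []
    rows_read = output.get("rowsRead")
    rows_copied = output.get("rowsCopied")
    if rows_read is None or rows_copied is None:
        problems.append("row counts not reported")
    elif rows_read != rows_copied:
        skipped = output.get("rowsSkipped", 0)
        problems.append(f"{rows_read - rows_copied} rows not copied ({skipped} reported as skipped)")
    if output.get("errors"):
        problems.append(f"{len(output['errors'])} error(s) reported")
    return problems


# Made-up sample shaped like a Copy activity output.
sample = {"dataRead": 1048576, "dataWritten": 1048576, "rowsRead": 1000, "rowsCopied": 1000, "errors": []}
print(check_copy_output(sample) or "row counts match, no errors")  # row counts match, no errors
```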

With this, we have completed the end-to-end pipeline: copying data from AWS S3 to ADLS Gen2 and on to Snowflake.
