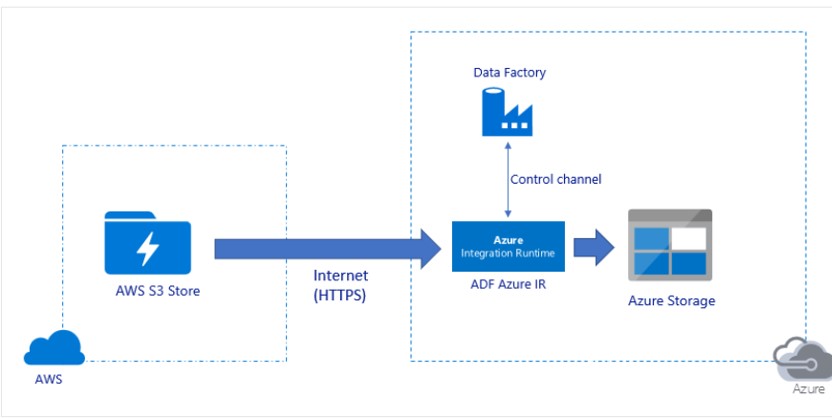

In this two-part series we will discuss an interesting use case: copying data from AWS S3 to ADLS Gen2 and then, once the data is in ADLS, ingesting it into Snowflake. Multicloud architectures have become popular for enterprise applications, with one enterprise using public cloud services from several different providers. Companies that adopted AWS or Azure for storage may later want to switch providers. Whether the driver is cost, contract renewal or avoiding dependency on a single cloud, organizations may want to migrate from AWS to Azure or vice versa. We won't deep dive into multicloud features here; instead we will walk through a data transfer use case across AWS and Azure.

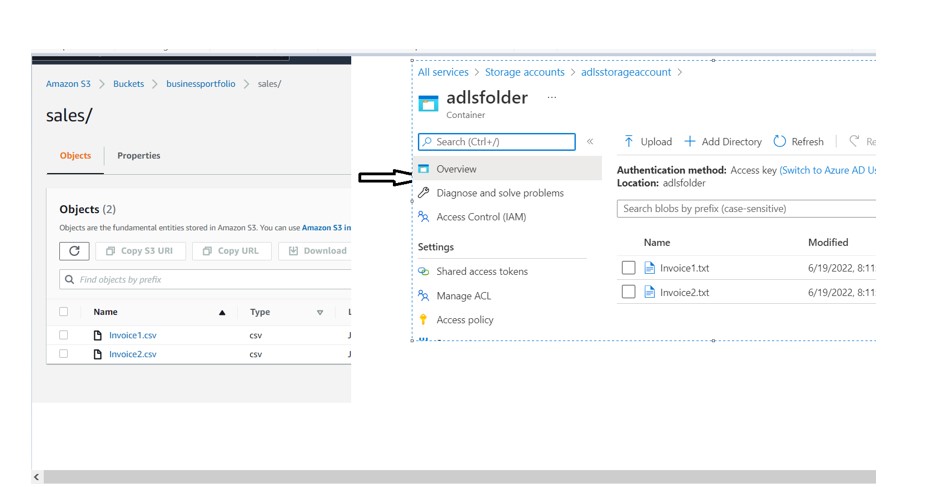

As part of this use case we want to migrate data from an AWS S3 bucket to Azure Storage. The AWS bucket currently holds feed files, and during the migration we want these files copied to Azure ADLS Gen2. For our scenario we will use Azure Data Factory (ADF) to migrate the data from S3 to Azure Storage.

Azure Data Factory provides a performant, robust, and cost-effective mechanism to migrate data at scale from Amazon S3 to Azure Blob Storage or Azure Data Lake Storage Gen2. Within a single copy activity run, ADF has a built-in retry mechanism, so it can handle a certain level of transient failures in the data stores or in the underlying network.

Technical implementation:

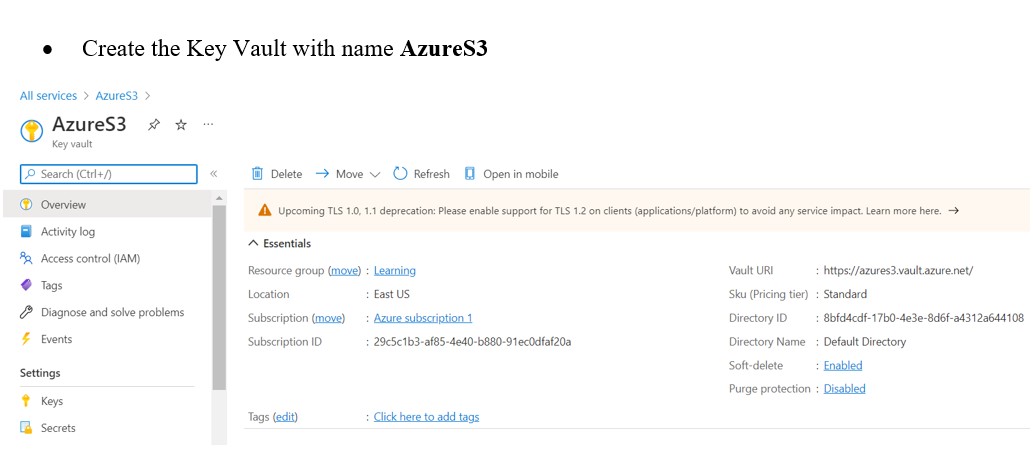

We will use Azure Key Vault to store the credentials (the app registration secret and the AWS secret access key) that ADF needs while copying the data from S3 to Azure.


The following steps need to be performed:

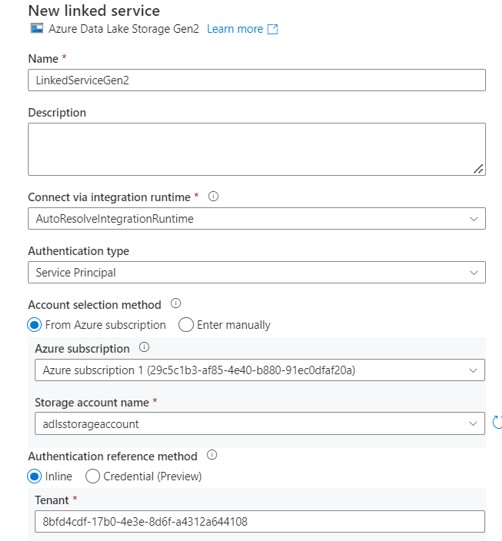

- Create the Gen2 storage account (adlsstorageaccount), i.e. a storage account with the hierarchical namespace enabled.

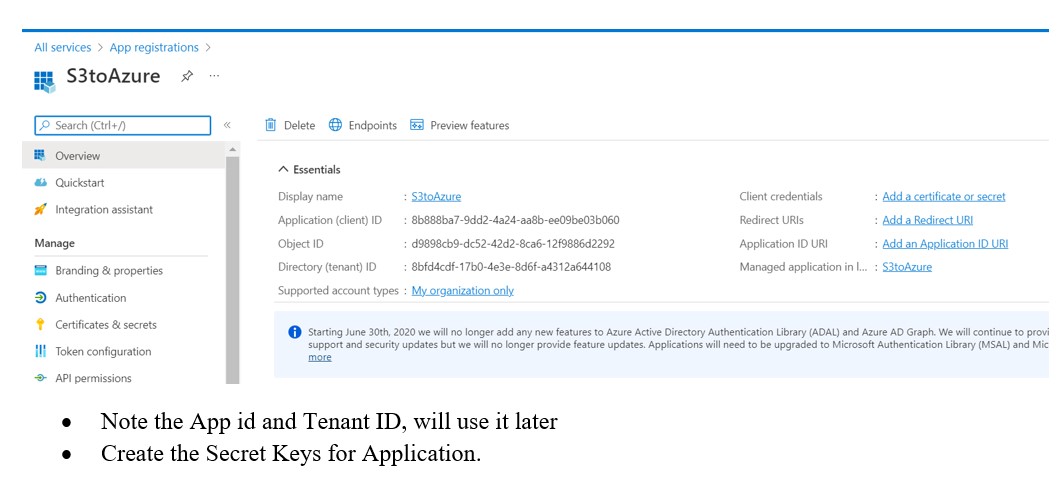

- Create the app registration (S3toAzure). ADF will authenticate to the storage account with this application's service principal.

- Go to the Gen2 storage account:

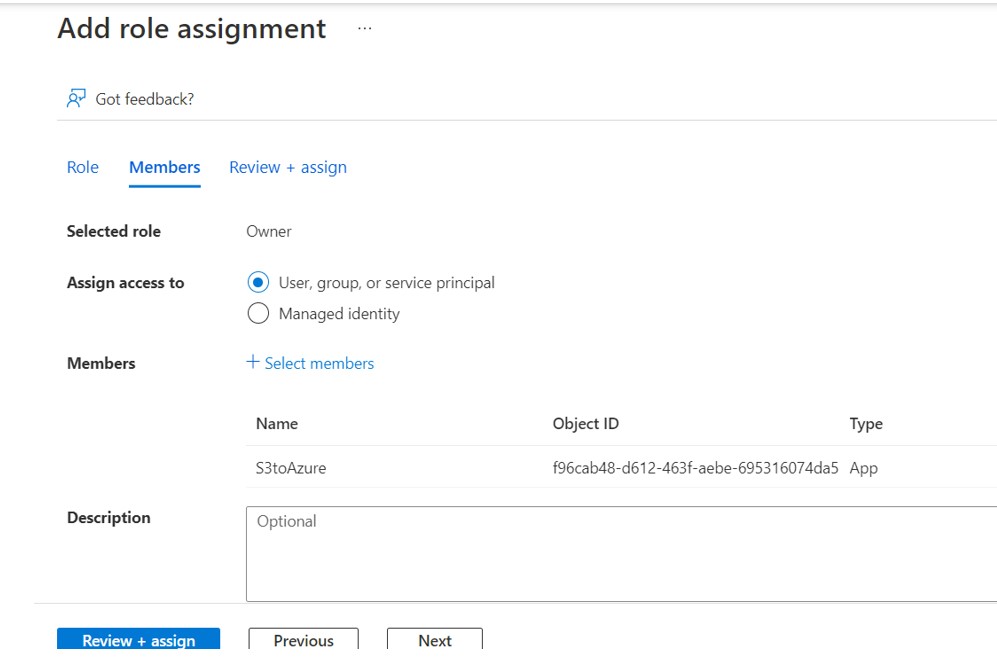

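- Select Access control (IAM).

- Assign a role to the application (S3toAzure) on the storage account. The screenshots use Owner; note that Owner only covers management operations, so for ADF to read and write blob data through the service principal, the application also needs a data role such as Storage Blob Data Contributor.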

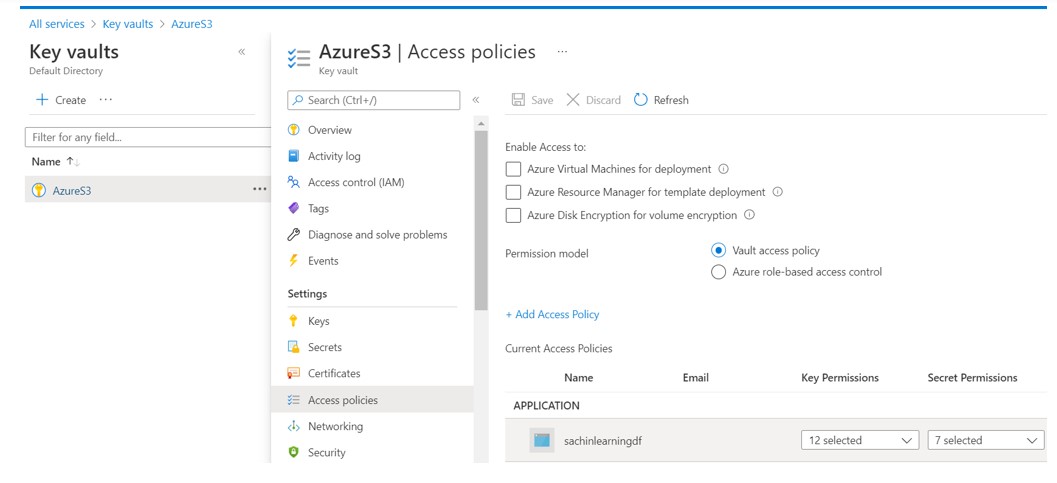

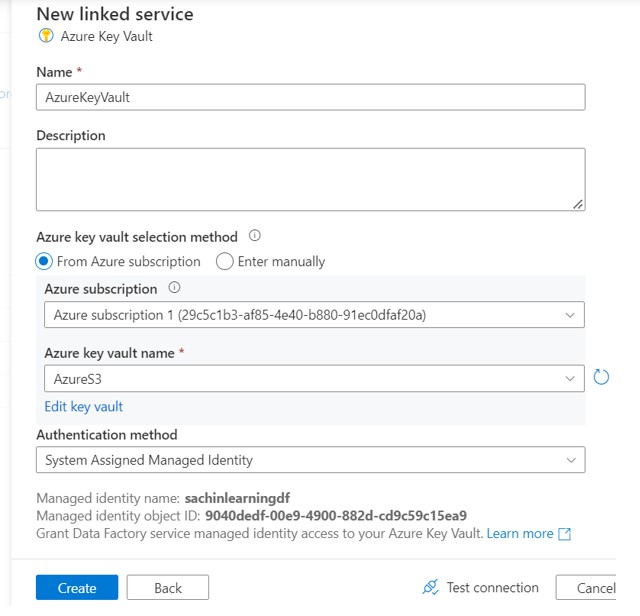
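If you prefer scripting the storage account and role steps instead of clicking through the portal, the sketch below builds the equivalent Azure CLI commands as argument lists. It is a minimal sketch under assumptions: the resource group, location, SKU, subscription ID and app ID are hypothetical placeholders, and only `adlsstorageaccount` comes from the steps above. `--hns true` turns on the hierarchical namespace that makes the account ADLS Gen2, and the role shown is Storage Blob Data Contributor because it grants data access.

```python
import shlex
from typing import List


def storage_account_scope(subscription_id: str, resource_group: str, account: str) -> str:
    """ARM resource ID of the storage account, used as the role-assignment scope."""
    return (
        f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Storage/storageAccounts/{account}"
    )


def create_gen2_account_cmd(account: str, resource_group: str, location: str) -> List[str]:
    """az command creating a StorageV2 account with hierarchical namespace (ADLS Gen2)."""
    return [
        "az", "storage", "account", "create",
        "--name", account,
        "--resource-group", resource_group,
        "--location", location,
        "--sku", "Standard_LRS",  # placeholder redundancy choice
        "--kind", "StorageV2",
        "--hns", "true",          # hierarchical namespace = Data Lake Storage Gen2
    ]


def assign_role_cmd(app_id: str, role: str, scope: str) -> List[str]:
    """az command assigning `role` to the app registration's service principal at `scope`."""
    return [
        "az", "role", "assignment", "create",
        "--assignee", app_id,
        "--role", role,
        "--scope", scope,
    ]


if __name__ == "__main__":
    # Hypothetical placeholder values; replace with your own.
    scope = storage_account_scope("<subscription-id>", "rg-migration", "adlsstorageaccount")
    for cmd in (
        create_gen2_account_cmd("adlsstorageaccount", "rg-migration", "eastus"),
        assign_role_cmd("<S3toAzure-app-id>", "Storage Blob Data Contributor", scope),
    ):
        print(shlex.join(cmd))  # paste the printed commands into a shell logged in with `az login`
```

Building argument lists rather than one long string keeps each flag visible and lets `shlex.join` handle quoting of values such as the role name, which contains spaces.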

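- The ADF pipeline reads its credentials from Key Vault, so the data factory must be given access to the vault.

- Add your ADF (its managed identity) to the Key Vault access policies, with permission to get secrets.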

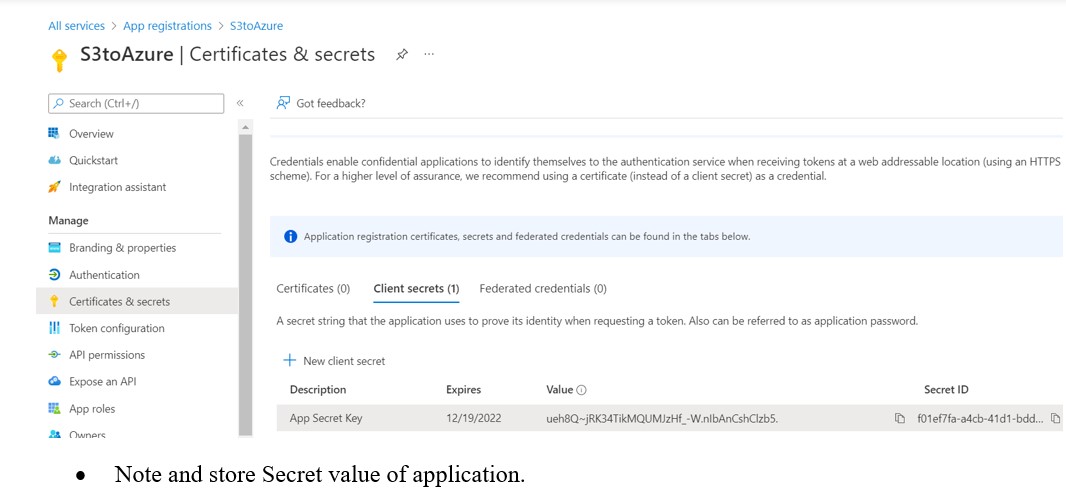

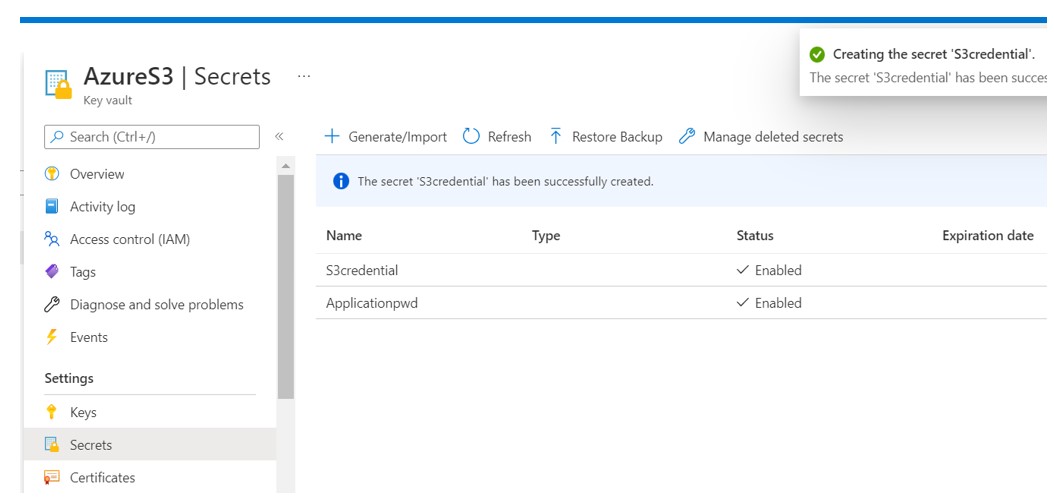

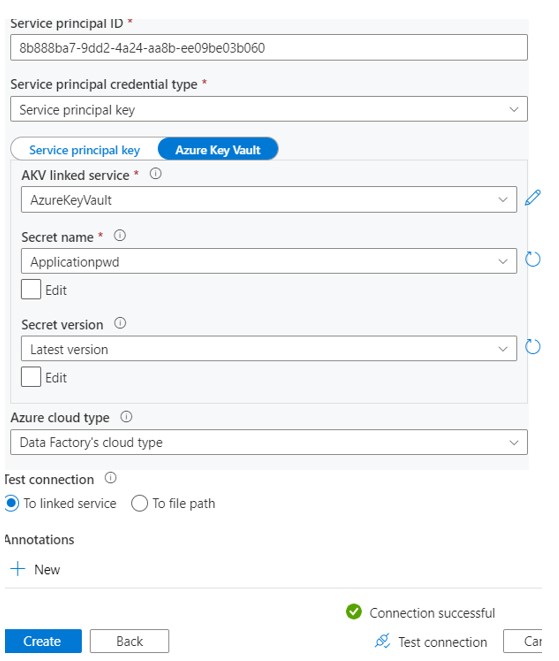
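The access-policy step can also be expressed as a single Azure CLI call. This is a minimal sketch assuming the vault uses the access-policy permission model (vaults on the Azure RBAC model would instead assign a role such as Key Vault Secrets User); the vault name and the object ID of the data factory's managed identity are hypothetical placeholders.

```python
import shlex
from typing import List


def keyvault_policy_cmd(vault_name: str, adf_object_id: str) -> List[str]:
    """az command granting the data factory's managed identity read access to secrets.

    Get is what ADF needs to resolve a secret reference; List is optional and
    only allows enumerating secret names.
    """
    return [
        "az", "keyvault", "set-policy",
        "--name", vault_name,
        "--object-id", adf_object_id,
        "--secret-permissions", "get", "list",
    ]


if __name__ == "__main__":
    # Hypothetical vault name and managed-identity object ID.
    print(shlex.join(keyvault_policy_cmd("kv-migration", "<adf-managed-identity-object-id>")))
```

Granting only secret read permissions keeps the factory from modifying or deleting the credentials it consumes.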

- Add the app registration's secret and the AWS key to Key Vault by clicking the Generate/Import button.

- For the application, use the client secret's value (the Value column, not the Secret ID).

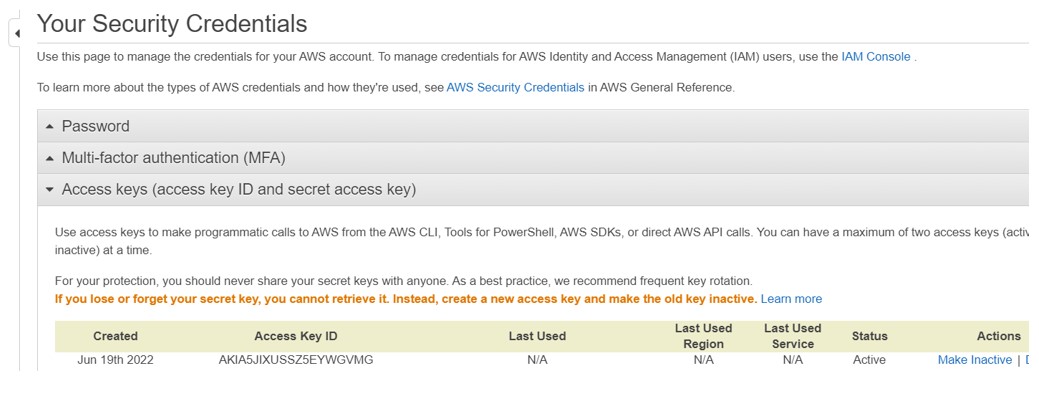

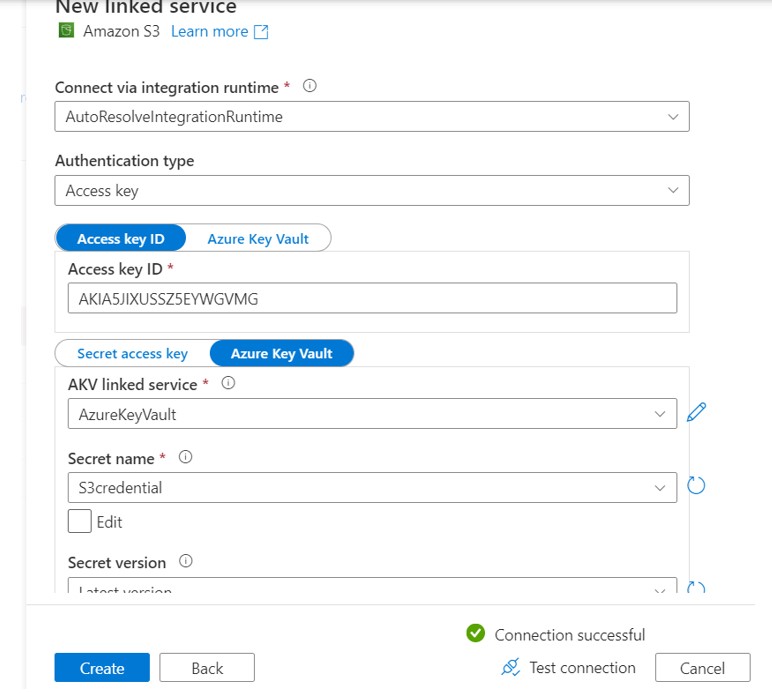

- For AWS, use an access key from your AWS account: generate a new access key and copy its Secret Access Key (not the Access Key ID).
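Storing the Access Key ID instead of the Secret Access Key is an easy mistake to make. The helper below is a heuristic sketch, not an official AWS validation: it assumes the usual shapes of AWS keys, where access key IDs are 20 characters starting with `AKIA` (or `ASIA` for temporary credentials) and secret access keys are 40 base64-style characters. The function names are hypothetical.

```python
import re

# Heuristic shapes of AWS credentials (assumption based on the common key formats).
ACCESS_KEY_ID = re.compile(r"(AKIA|ASIA)[A-Z0-9]{16}")
SECRET_ACCESS_KEY = re.compile(r"[A-Za-z0-9/+=]{40}")


def looks_like_access_key_id(value: str) -> bool:
    return ACCESS_KEY_ID.fullmatch(value) is not None


def looks_like_secret_access_key(value: str) -> bool:
    return SECRET_ACCESS_KEY.fullmatch(value) is not None


def check_secret_for_key_vault(value: str) -> None:
    """Raise before storing a value that is clearly not the Secret Access Key."""
    if looks_like_access_key_id(value):
        raise ValueError("This is the Access Key ID; store the Secret Access Key instead.")
    if not looks_like_secret_access_key(value):
        raise ValueError("Value does not look like an AWS Secret Access Key.")


if __name__ == "__main__":
    # AWS's documented example key pair, not real credentials.
    check_secret_for_key_vault("wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY")  # passes silently
    try:
        check_secret_for_key_vault("AKIAIOSFODNN7EXAMPLE")
    except ValueError as err:
        print(err)
```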

Now create the linked services: Key Vault, S3 and Gen2.

- Create the Key Vault linked service.

- Create the S3 linked service and reference the Azure Key Vault linked service for the S3 credential.

- Create the ADLS Gen2 linked service and reference the Azure Key Vault linked service for the Applicationpwd credential.

LS Gen2 (the ADLS Gen2 linked service):

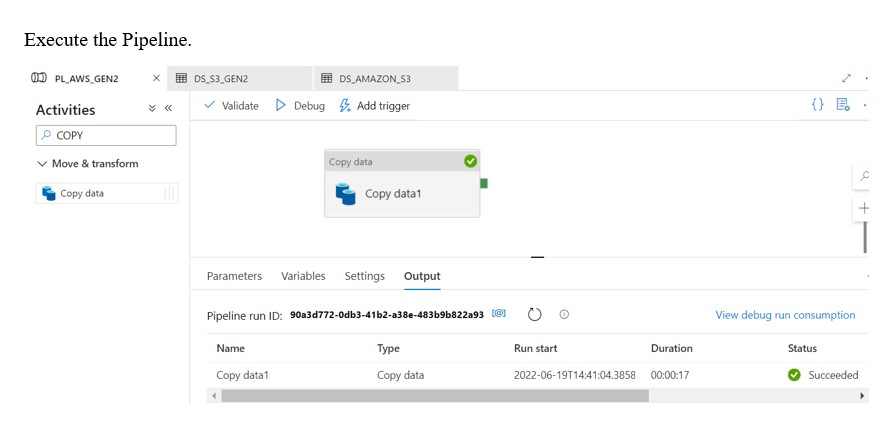
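Behind the UI, each linked service is a JSON definition. The sketch below builds the three definitions as Python dicts to show how the S3 and Gen2 linked services point at Key Vault secrets instead of holding credentials inline. The linked-service names, the vault name and the `aws-secret-access-key` secret name are hypothetical; `Applicationpwd` is the secret name used above. Treat the property set as a minimal sketch of ADF's JSON schema, not a complete definition.

```python
import json
from typing import Any, Dict

# Hypothetical linked-service names.
KV_LS = "AzureKeyVaultLS"
S3_LS = "AmazonS3LS"
GEN2_LS = "AdlsGen2LS"


def kv_secret(secret_name: str) -> Dict[str, Any]:
    """Reference to a secret resolved at runtime through the Key Vault linked service."""
    return {
        "type": "AzureKeyVaultSecret",
        "store": {"referenceName": KV_LS, "type": "LinkedServiceReference"},
        "secretName": secret_name,
    }


def linked_services(vault: str, access_key_id: str, account: str,
                    app_id: str, tenant: str) -> Dict[str, Dict[str, Any]]:
    return {
        KV_LS: {"name": KV_LS, "properties": {
            "type": "AzureKeyVault",
            "typeProperties": {"baseUrl": f"https://{vault}.vault.azure.net"},
        }},
        S3_LS: {"name": S3_LS, "properties": {
            "type": "AmazonS3",
            "typeProperties": {
                "authenticationType": "AccessKey",
                "accessKeyId": access_key_id,                          # not secret
                "secretAccessKey": kv_secret("aws-secret-access-key"),  # from Key Vault
            },
        }},
        GEN2_LS: {"name": GEN2_LS, "properties": {
            "type": "AzureBlobFS",  # ADF's type name for ADLS Gen2
            "typeProperties": {
                "url": f"https://{account}.dfs.core.windows.net",
                "servicePrincipalId": app_id,                         # S3toAzure app ID
                "servicePrincipalKey": kv_secret("Applicationpwd"),   # from Key Vault
                "tenant": tenant,
            },
        }},
    }


if __name__ == "__main__":
    defs = linked_services("kv-migration", "<aws-access-key-id>", "adlsstorageaccount",
                           "<S3toAzure-app-id>", "<tenant-id>")
    print(json.dumps(defs, indent=2))
```

Because both credentials are `AzureKeyVaultSecret` references, rotating a key only means updating the secret in Key Vault; the linked services stay unchanged.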

- Go to ADF, create a pipeline and add a Copy activity.

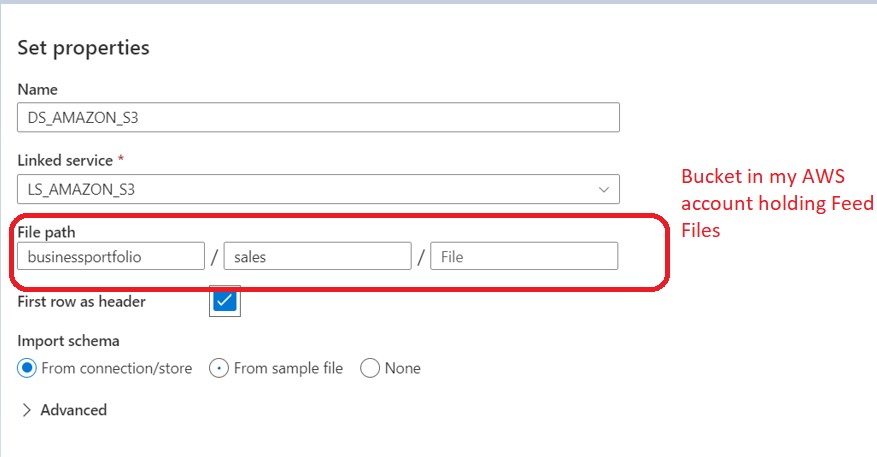

- In Source, create a new dataset and select Amazon S3 as the data store.

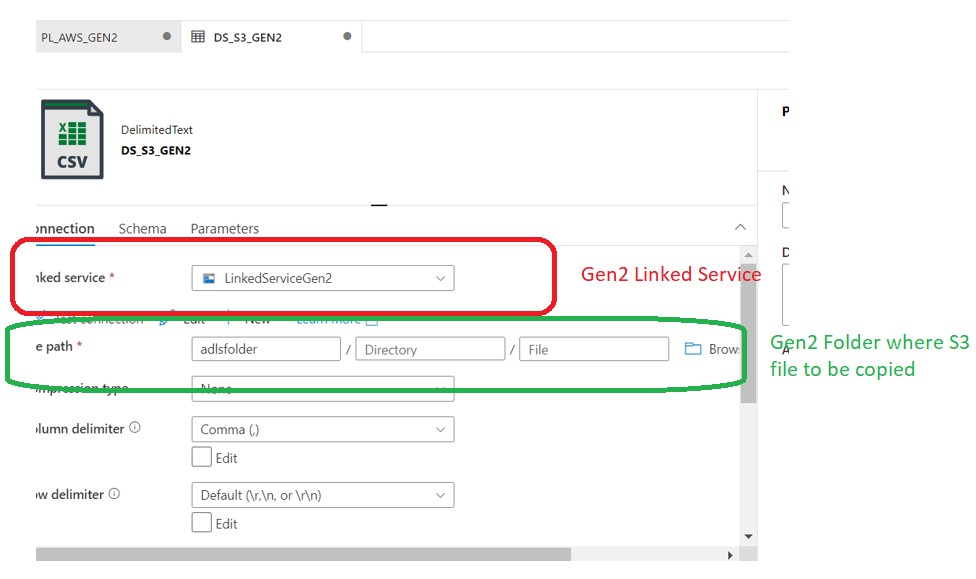

- In the Copy activity's Sink (target), select Azure Data Lake Storage Gen2 as the data store.

Running the pipeline copies the files from S3 to ADLS Gen2.
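For reference, the pipeline built in the UI corresponds roughly to the JSON sketched below. It assumes Binary datasets (the files are copied as-is, which suits feed files), hypothetical dataset and pipeline names, placeholder bucket and container names, and linked-service names matching a hypothetical `AmazonS3LS`/`AdlsGen2LS` pair. The `policy` block adds activity-level retries on top of the copy activity's built-in handling of transient failures; its values are illustrative.

```python
import json
from typing import Any, Dict


def binary_dataset(name: str, linked_service: str, location: Dict[str, Any]) -> Dict[str, Any]:
    """Binary dataset: files are moved byte-for-byte without parsing."""
    return {
        "name": name,
        "properties": {
            "type": "Binary",
            "linkedServiceName": {"referenceName": linked_service, "type": "LinkedServiceReference"},
            "typeProperties": {"location": location},
        },
    }


def copy_activity(source_ds: str, sink_ds: str) -> Dict[str, Any]:
    return {
        "name": "CopyS3ToGen2",
        "type": "Copy",
        "policy": {"retry": 2, "retryIntervalInSeconds": 60},  # illustrative values
        "inputs": [{"referenceName": source_ds, "type": "DatasetReference"}],
        "outputs": [{"referenceName": sink_ds, "type": "DatasetReference"}],
        "typeProperties": {
            "source": {"type": "BinarySource",
                       "storeSettings": {"type": "AmazonS3ReadSettings", "recursive": True}},
            "sink": {"type": "BinarySink",
                     "storeSettings": {"type": "AzureBlobFSWriteSettings"}},
        },
    }


s3_ds = binary_dataset("S3FeedFiles", "AmazonS3LS",
                       {"type": "AmazonS3Location", "bucketName": "<feed-bucket>"})
gen2_ds = binary_dataset("Gen2FeedFiles", "AdlsGen2LS",
                         {"type": "AzureBlobFSLocation", "fileSystem": "<container>"})
pipeline = {"name": "S3ToGen2",
            "properties": {"activities": [copy_activity(s3_ds["name"], gen2_ds["name"])]}}

if __name__ == "__main__":
    print(json.dumps({"datasets": [s3_ds, gen2_ds], "pipeline": pipeline}, indent=2))
```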

In the next part, we will see how to load data from Gen2 into Snowflake.