In this post, we will delve into the creation of a customized audit process for Snowflake COPY load. Building upon our previous discussion on automating the COPY process for loading a Snowflake table from an S3 bucket, where we dynamically generated the COPY command at runtime using a stored procedure. As an extension to this process, our goal is to capture the load statistics of each COPY operation in our audit table. While the COPY_HISTORY or LOAD_HISTORY views are available, their extensive data can sometimes pose challenges for efficient querying.

Furthermore, our audit framework is designed to specifically capture load statistics for a particular business module. Instead of querying the standard Snowflake views, developers or administrators can query their custom table to obtain the statistics of their COPY loads.

This would be complete technical post and I tried to use :

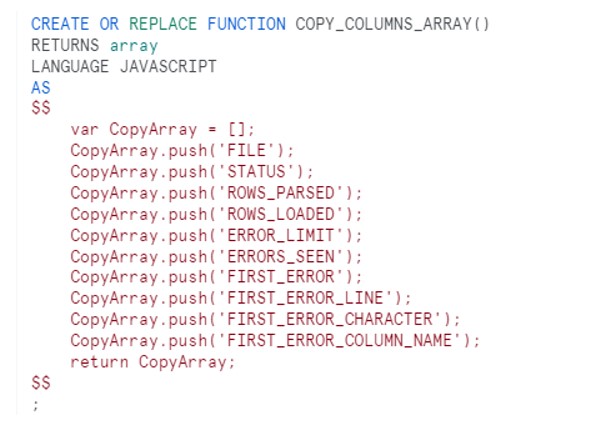

- Custom UDF, use an array to store COPY load columns.

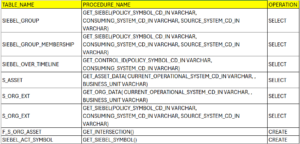

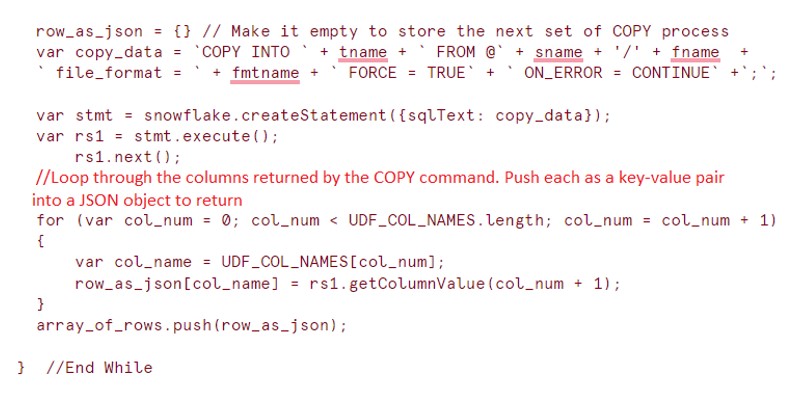

- Javascript procedure: Calls the Custom UDF and queries the Array columns. Push each as a key-value pair into a JSON object to return.

- Create AUDIT table at runtime and insert the ARRAY into Table.

- Flatten the AUDIT table and read data in Rows and columns.

Technical Implementation:

- Develop a UDF:

- Develop a stored procedure which will call the above UDF.

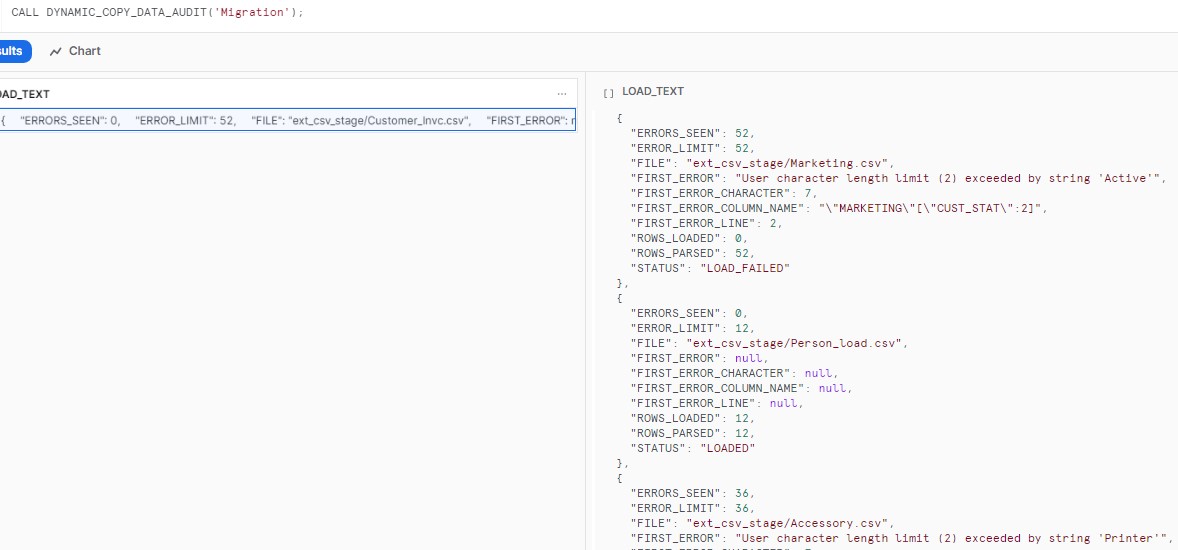

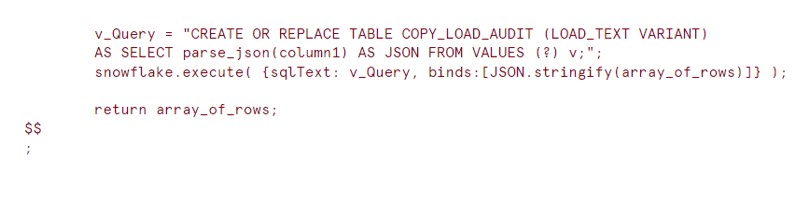

- Call the Stored procedure :

CALL DYNAMIC_COPY_DATA_AUDIT('Migration');

Please note below output of the proc:

- The procedure will create the AUDIT table and store the stats of COPY process.

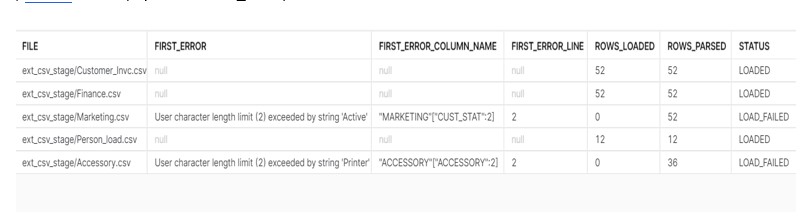

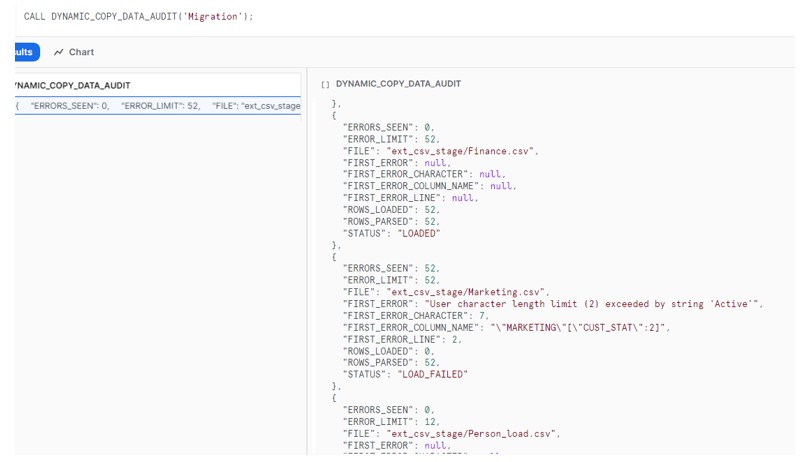

select * from COPY_LOAD_AUDIT;

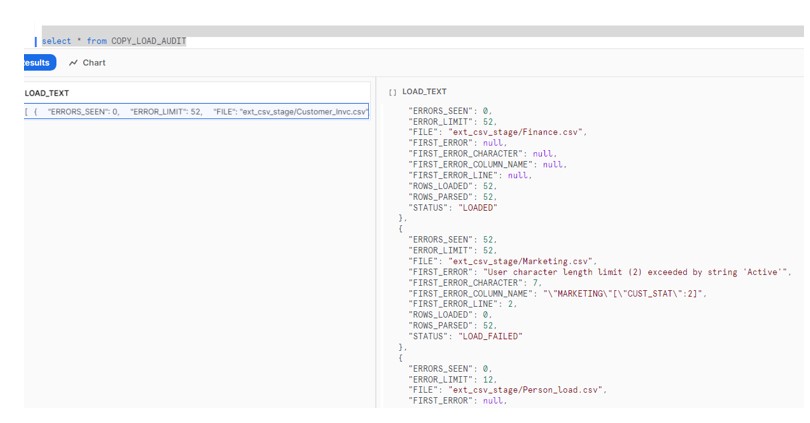

- Flatten the table to view the data.

select

value:FILE::string as FILE,

value:FIRST_ERROR::string as FIRST_ERROR,

value:FIRST_ERROR_COLUMN_NAME::string as FIRST_ERROR_COLUMN_NAME,

value:FIRST_ERROR_LINE::string as FIRST_ERROR_LINE,

value:ROWS_LOADED::string as ROWS_LOADED,

value:ROWS_PARSED::string as ROWS_PARSED,

value:STATUS::string as STATUS

FROM COPY_LOAD_AUDIT

, lateral flatten(input => LOAD_TEXT);