During this post we will discuss about Account Usage Schema that contains metadata about every object in your Snowflake account. ACCOUNT_USAGE, Views that display object metadata and usage metrics for your account. These views retain data history for 1 year and post 1 year we can’t retrieve any older data for and data comparisons or patterns. So what if have requirement to compare history data older than 1 year with current usage patterns. Hence there should be some mechanism where we can retain the metadata in history tables even 365 days elapsed.

Yes, we can think of the CLONE the ACCOUNT_USAGE schema but limitation is that SNOWFAKE Database is shared DB. Cloning does not work on shared DB and getting below error.

SQL compilation error: Cannot clone from a view object.

One of the approach we can follow is to periodically take a backup of ACCOUNT_USAGE views says every month or 3 month for future references.

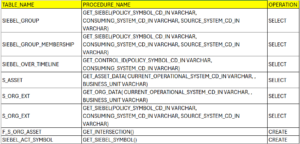

We have created the below sql scripting procedure, which exports the data into Custom history tables for below metadata tables:

- LOGIN_HISTORY

- COPY_HISTORY

- QUERY_HISTORY

Procedure works on the MERGE i.e. UPSERT logic, we have identified the few keys w.r.t each table and based on these keys Insert or Update happens to the history table.

Base on our need we can include more tables to this process.

Note: There can be multiple approach or better ways to take the backup of metadata tables but this works for our scenario.

CREATE TABLE IF NOT EXISTS LOGIN_HISTORY

AS

SELECT

EVENT_ID,

EVENT_TIMESTAMP,

EVENT_TYPE,

USER_NAME,

CLIENT_IP,

REPORTED_CLIENT_TYPE,

REPORTED_CLIENT_VERSION,

FIRST_AUTHENTICATION_FACTOR,

SECOND_AUTHENTICATION_FACTOR,

IS_SUCCESS,

ERROR_CODE,

ERROR_MESSAGE,

RELATED_EVENT_ID

FROM SNOWFLAKE.ACCOUNT_USAGE.LOGIN_HISTORY

WHERE 1 = 0;

CREATE TABLE IF NOT EXISTS COPY_HISTORY

AS

SELECT

FILE_NAME,

STAGE_LOCATION,

LAST_LOAD_TIME,

ROW_COUNT,

ROW_PARSED,

FILE_SIZE,

FIRST_ERROR_MESSAGE,

FIRST_ERROR_LINE_NUMBER,

ERROR_COUNT,

ERROR_LIMIT,

STATUS,

TABLE_ID,

TABLE_NAME,

TABLE_SCHEMA_NAME,

TABLE_CATALOG_NAME,

PIPE_CATALOG_NAME,

PIPE_SCHEMA_NAME,

PIPE_NAME

FROM SNOWFLAKE.ACCOUNT_USAGE.COPY_HISTORY

WHERE 1 = 0;

CREATE TABLE IF NOT EXISTS QUERY_HISTORY

AS

SELECT

QUERY_ID, QUERY_TEXT, DATABASE_ID, DATABASE_NAME, SCHEMA_ID, SCHEMA_NAME, QUERY_TYPE, SESSION_ID, USER_NAME, ROLE_NAME, WAREHOUSE_ID,

WAREHOUSE_NAME, WAREHOUSE_SIZE, WAREHOUSE_TYPE, CLUSTER_NUMBER, QUERY_TAG, EXECUTION_STATUS, ERROR_CODE, ERROR_MESSAGE, START_TIME, END_TIME,

TOTAL_ELAPSED_TIME, BYTES_SCANNED, PERCENTAGE_SCANNED_FROM_CACHE, BYTES_WRITTEN, BYTES_WRITTEN_TO_RESULT, BYTES_READ_FROM_RESULT, ROWS_PRODUCED,

ROWS_INSERTED, ROWS_UPDATED, ROWS_DELETED, ROWS_UNLOADED, BYTES_DELETED, PARTITIONS_SCANNED, PARTITIONS_TOTAL, BYTES_SPILLED_TO_LOCAL_STORAGE,

BYTES_SPILLED_TO_REMOTE_STORAGE, BYTES_SENT_OVER_THE_NETWORK, COMPILATION_TIME, EXECUTION_TIME, QUEUED_PROVISIONING_TIME, QUEUED_REPAIR_TIME,

QUEUED_OVERLOAD_TIME, TRANSACTION_BLOCKED_TIME, OUTBOUND_DATA_TRANSFER_CLOUD, OUTBOUND_DATA_TRANSFER_REGION, OUTBOUND_DATA_TRANSFER_BYTES,

INBOUND_DATA_TRANSFER_CLOUD, INBOUND_DATA_TRANSFER_REGION, INBOUND_DATA_TRANSFER_BYTES, LIST_EXTERNAL_FILES_TIME, CREDITS_USED_CLOUD_SERVICES,

RELEASE_VERSION, EXTERNAL_FUNCTION_TOTAL_INVOCATIONS, EXTERNAL_FUNCTION_TOTAL_SENT_ROWS,

EXTERNAL_FUNCTION_TOTAL_RECEIVED_ROWS, EXTERNAL_FUNCTION_TOTAL_SENT_BYTES, EXTERNAL_FUNCTION_TOTAL_RECEIVED_BYTES, QUERY_LOAD_PERCENT, IS_CLIENT_GENERATED_STATEMENT

FROM SNOWFLAKE.ACCOUNT_USAGE.QUERY_HISTORY

WHERE 1 = 0;

Procedure:

create or replace procedure metadata_extract_jobs_info(table_name varchar)

returns varchar

execute as owner as

$$

declare

num_rec integer default 0;

ret_status string default '';

begin

select "COUNT(1)" into :num_rec from table(result_scan(last_query_id()));

if (:num_rec > 0 AND :table_name = 'LOGIN_HISTORY') THEN

let query_to_run varchar := 'MERGE INTO ' || :table_name || ' DB_HIST USING (

SELECT EVENT_TIMESTAMP, EVENT_ID, EVENT_TYPE, USER_NAME, CLIENT_IP, REPORTED_CLIENT_TYPE, REPORTED_CLIENT_VERSION,

FIRST_AUTHENTICATION_FACTOR, SECOND_AUTHENTICATION_FACTOR, IS_SUCCESS, ERROR_CODE, ERROR_MESSAGE, RELATED_EVENT_ID

FROM SNOWFLAKE.ACCOUNT_USAGE.LOGIN_HISTORY

) LGN_HIST ON LGN_HIST.EVENT_ID = DB_HIST.EVENT_ID AND LGN_HIST.EVENT_TIMESTAMP = DB_HIST.EVENT_TIMESTAMP

AND LGN_HIST.EVENT_TYPE = DB_HIST.EVENT_TYPE

WHEN NOT MATCHED THEN

INSERT

(

EVENT_TIMESTAMP, EVENT_ID, EVENT_TYPE, USER_NAME, CLIENT_IP, REPORTED_CLIENT_TYPE, REPORTED_CLIENT_VERSION, FIRST_AUTHENTICATION_FACTOR,

SECOND_AUTHENTICATION_FACTOR, IS_SUCCESS, ERROR_CODE, ERROR_MESSAGE, RELATED_EVENT_ID

) VALUES

(

LGN_HIST.EVENT_TIMESTAMP, LGN_HIST.EVENT_ID, LGN_HIST.EVENT_TYPE, LGN_HIST.USER_NAME, LGN_HIST.CLIENT_IP, LGN_HIST.REPORTED_CLIENT_TYPE,

LGN_HIST.REPORTED_CLIENT_VERSION, LGN_HIST.FIRST_AUTHENTICATION_FACTOR, LGN_HIST.SECOND_AUTHENTICATION_FACTOR, LGN_HIST.IS_SUCCESS,

LGN_HIST.ERROR_CODE, LGN_HIST.ERROR_MESSAGE, LGN_HIST.RELATED_EVENT_ID

);';

execute immediate :query_to_run;

select "number of rows inserted" into :num_rec from table(result_scan(last_query_id()));

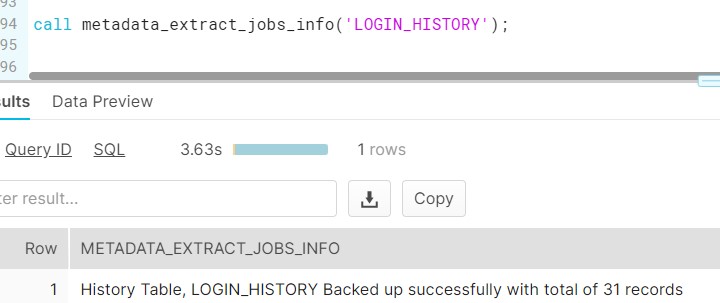

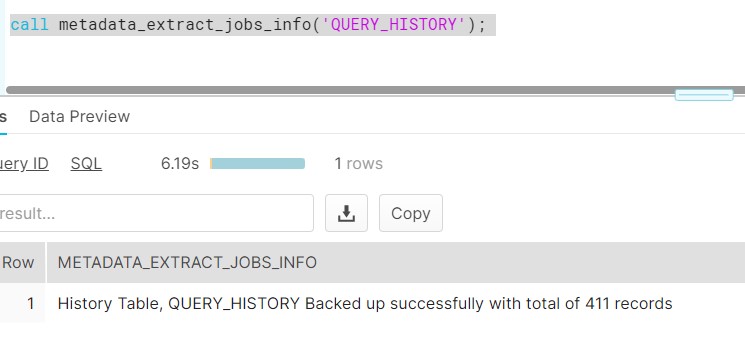

ret_status := 'History Table, ' || :table_name || ' Backed up successfully with total of ' || :num_rec || ' records';

return ret_status;

END IF;

if (:num_rec > 0 AND :table_name = 'COPY_HISTORY') THEN

let query_to_run varchar := 'MERGE INTO ' || :table_name || ' DB_HIST USING (

SELECT FILE_NAME, STAGE_LOCATION, LAST_LOAD_TIME, ROW_COUNT, ROW_PARSED, FILE_SIZE, FIRST_ERROR_MESSAGE, FIRST_ERROR_LINE_NUMBER, ERROR_COUNT,

ERROR_LIMIT, STATUS, TABLE_ID, TABLE_NAME,TABLE_SCHEMA_NAME, TABLE_CATALOG_NAME,PIPE_CATALOG_NAME,PIPE_SCHEMA_NAME,PIPE_NAME

FROM SNOWFLAKE.ACCOUNT_USAGE.COPY_HISTORY

) CPY_HIST ON CPY_HIST.FILE_NAME = DB_HIST.FILE_NAME AND CPY_HIST.TABLE_ID = DB_HIST.TABLE_ID

WHEN NOT MATCHED THEN

INSERT

(

FILE_NAME, STAGE_LOCATION, LAST_LOAD_TIME, ROW_COUNT, ROW_PARSED, FILE_SIZE, FIRST_ERROR_MESSAGE, FIRST_ERROR_LINE_NUMBER, ERROR_COUNT,

ERROR_LIMIT, STATUS, TABLE_ID, TABLE_NAME,TABLE_SCHEMA_NAME, TABLE_CATALOG_NAME,PIPE_CATALOG_NAME,PIPE_SCHEMA_NAME,PIPE_NAME

) VALUES

(

FILE_NAME, STAGE_LOCATION, LAST_LOAD_TIME, ROW_COUNT, ROW_PARSED, FILE_SIZE, FIRST_ERROR_MESSAGE, FIRST_ERROR_LINE_NUMBER, ERROR_COUNT,

ERROR_LIMIT, STATUS, TABLE_ID, TABLE_NAME,TABLE_SCHEMA_NAME, TABLE_CATALOG_NAME,PIPE_CATALOG_NAME,PIPE_SCHEMA_NAME,PIPE_NAME

);';

execute immediate :query_to_run;

select "number of rows inserted" into :num_rec from table(result_scan(last_query_id()));

ret_status := 'History Table, ' || :table_name || ' Backed up successfully with total of ' || :num_rec || ' records';

return ret_status;

END IF;

if (:num_rec > 0 AND :table_name = 'QUERY_HISTORY') THEN

let query_to_run varchar := 'MERGE INTO ' || :table_name || ' DB_HIST USING (

SELECT QUERY_ID, QUERY_TEXT, DATABASE_ID, DATABASE_NAME, SCHEMA_ID, SCHEMA_NAME, QUERY_TYPE, SESSION_ID, USER_NAME, ROLE_NAME, WAREHOUSE_ID,

WAREHOUSE_NAME, WAREHOUSE_SIZE, WAREHOUSE_TYPE, CLUSTER_NUMBER, QUERY_TAG, EXECUTION_STATUS, ERROR_CODE, ERROR_MESSAGE, START_TIME, END_TIME,

TOTAL_ELAPSED_TIME, BYTES_SCANNED, PERCENTAGE_SCANNED_FROM_CACHE, BYTES_WRITTEN, BYTES_WRITTEN_TO_RESULT, BYTES_READ_FROM_RESULT, ROWS_PRODUCED,

ROWS_INSERTED, ROWS_UPDATED, ROWS_DELETED, ROWS_UNLOADED, BYTES_DELETED, PARTITIONS_SCANNED, PARTITIONS_TOTAL, BYTES_SPILLED_TO_LOCAL_STORAGE,

BYTES_SPILLED_TO_REMOTE_STORAGE, BYTES_SENT_OVER_THE_NETWORK, COMPILATION_TIME, EXECUTION_TIME, QUEUED_PROVISIONING_TIME, QUEUED_REPAIR_TIME,

QUEUED_OVERLOAD_TIME, TRANSACTION_BLOCKED_TIME, OUTBOUND_DATA_TRANSFER_CLOUD, OUTBOUND_DATA_TRANSFER_REGION, OUTBOUND_DATA_TRANSFER_BYTES,

INBOUND_DATA_TRANSFER_CLOUD, INBOUND_DATA_TRANSFER_REGION, INBOUND_DATA_TRANSFER_BYTES, LIST_EXTERNAL_FILES_TIME, CREDITS_USED_CLOUD_SERVICES,

RELEASE_VERSION, EXTERNAL_FUNCTION_TOTAL_INVOCATIONS, EXTERNAL_FUNCTION_TOTAL_SENT_ROWS,

EXTERNAL_FUNCTION_TOTAL_RECEIVED_ROWS, EXTERNAL_FUNCTION_TOTAL_SENT_BYTES, EXTERNAL_FUNCTION_TOTAL_RECEIVED_BYTES, QUERY_LOAD_PERCENT, IS_CLIENT_GENERATED_STATEMENT

FROM SNOWFLAKE.ACCOUNT_USAGE.QUERY_HISTORY

) QRY_HIST ON QRY_HIST.QUERY_TEXT = DB_HIST.QUERY_TEXT

AND QRY_HIST.DATABASE_ID = DB_HIST.DATABASE_ID

AND QRY_HIST.SCHEMA_ID = DB_HIST.SCHEMA_ID

AND QRY_HIST.QUERY_TYPE = DB_HIST.QUERY_TYPE

AND QRY_HIST.DATABASE_ID = DB_HIST.DATABASE_ID

WHEN NOT MATCHED THEN

INSERT

(

QUERY_ID, QUERY_TEXT, DATABASE_ID, DATABASE_NAME, SCHEMA_ID, SCHEMA_NAME, QUERY_TYPE, SESSION_ID, USER_NAME, ROLE_NAME, WAREHOUSE_ID,

WAREHOUSE_NAME, WAREHOUSE_SIZE, WAREHOUSE_TYPE, CLUSTER_NUMBER, QUERY_TAG, EXECUTION_STATUS, ERROR_CODE, ERROR_MESSAGE, START_TIME, END_TIME,

TOTAL_ELAPSED_TIME, BYTES_SCANNED, PERCENTAGE_SCANNED_FROM_CACHE, BYTES_WRITTEN, BYTES_WRITTEN_TO_RESULT, BYTES_READ_FROM_RESULT, ROWS_PRODUCED,

ROWS_INSERTED, ROWS_UPDATED, ROWS_DELETED, ROWS_UNLOADED, BYTES_DELETED, PARTITIONS_SCANNED, PARTITIONS_TOTAL, BYTES_SPILLED_TO_LOCAL_STORAGE,

BYTES_SPILLED_TO_REMOTE_STORAGE, BYTES_SENT_OVER_THE_NETWORK, COMPILATION_TIME, EXECUTION_TIME, QUEUED_PROVISIONING_TIME, QUEUED_REPAIR_TIME,

QUEUED_OVERLOAD_TIME, TRANSACTION_BLOCKED_TIME, OUTBOUND_DATA_TRANSFER_CLOUD, OUTBOUND_DATA_TRANSFER_REGION, OUTBOUND_DATA_TRANSFER_BYTES,

INBOUND_DATA_TRANSFER_CLOUD, INBOUND_DATA_TRANSFER_REGION, INBOUND_DATA_TRANSFER_BYTES, LIST_EXTERNAL_FILES_TIME, CREDITS_USED_CLOUD_SERVICES,

RELEASE_VERSION, EXTERNAL_FUNCTION_TOTAL_INVOCATIONS, EXTERNAL_FUNCTION_TOTAL_SENT_ROWS, EXTERNAL_FUNCTION_TOTAL_RECEIVED_ROWS, EXTERNAL_FUNCTION_TOTAL_SENT_BYTES, EXTERNAL_FUNCTION_TOTAL_RECEIVED_BYTES, QUERY_LOAD_PERCENT, IS_CLIENT_GENERATED_STATEMENT

) VALUES

(

QUERY_ID, QUERY_TEXT, DATABASE_ID, DATABASE_NAME, SCHEMA_ID, SCHEMA_NAME, QUERY_TYPE, SESSION_ID, USER_NAME, ROLE_NAME, WAREHOUSE_ID,

WAREHOUSE_NAME, WAREHOUSE_SIZE, WAREHOUSE_TYPE, CLUSTER_NUMBER, QUERY_TAG, EXECUTION_STATUS, ERROR_CODE, ERROR_MESSAGE, START_TIME, END_TIME,

TOTAL_ELAPSED_TIME, BYTES_SCANNED, PERCENTAGE_SCANNED_FROM_CACHE, BYTES_WRITTEN, BYTES_WRITTEN_TO_RESULT, BYTES_READ_FROM_RESULT, ROWS_PRODUCED,

ROWS_INSERTED, ROWS_UPDATED, ROWS_DELETED, ROWS_UNLOADED, BYTES_DELETED, PARTITIONS_SCANNED, PARTITIONS_TOTAL, BYTES_SPILLED_TO_LOCAL_STORAGE,

BYTES_SPILLED_TO_REMOTE_STORAGE, BYTES_SENT_OVER_THE_NETWORK, COMPILATION_TIME, EXECUTION_TIME, QUEUED_PROVISIONING_TIME, QUEUED_REPAIR_TIME,

QUEUED_OVERLOAD_TIME, TRANSACTION_BLOCKED_TIME, OUTBOUND_DATA_TRANSFER_CLOUD, OUTBOUND_DATA_TRANSFER_REGION, OUTBOUND_DATA_TRANSFER_BYTES,

INBOUND_DATA_TRANSFER_CLOUD, INBOUND_DATA_TRANSFER_REGION, INBOUND_DATA_TRANSFER_BYTES, LIST_EXTERNAL_FILES_TIME, CREDITS_USED_CLOUD_SERVICES,

RELEASE_VERSION, EXTERNAL_FUNCTION_TOTAL_INVOCATIONS, EXTERNAL_FUNCTION_TOTAL_SENT_ROWS,

EXTERNAL_FUNCTION_TOTAL_RECEIVED_ROWS, EXTERNAL_FUNCTION_TOTAL_SENT_BYTES, EXTERNAL_FUNCTION_TOTAL_RECEIVED_BYTES, QUERY_LOAD_PERCENT, IS_CLIENT_GENERATED_STATEMENT

);';

execute immediate :query_to_run;

select "number of rows inserted" into :num_rec from table(result_scan(last_query_id()));

ret_status := 'History Table, ' || :table_name || ' Backed up successfully with total of ' || :num_rec || ' records';

return ret_status;

END IF;

exception

when statement_error then

return object_construct('Error type', 'STATEMENT_ERROR',

'SQLCODE', sqlcode,

'SQLERRM', sqlerrm,

'SQLSTATE', sqlstate);

when other then

return object_construct('Error type', 'Other error',

'SQLCODE', sqlcode,

'SQLERRM', sqlerrm,

'SQLSTATE', sqlstate);

end;

$$;

Execute the Procedure:

Verify the data inside the below custom history tables.

SELECT * FROM LOGIN_HISTORY;

SELECT * FROM COPY_HISTORY;

SELECT * FROM QUERY_HISTORY;