In the last post, we talked about Salesforce integration with AWS via the Amazon AppFlow service, where no coding was required.

It was a cost-effective solution that needed no coding at any step: with just a few clicks, you get the data from the SaaS system (Salesforce) into the cloud system (AWS). But easy things do not come for free, and customers have to pay for the number of flows they run.

Below is the pricing quoted by Amazon for data processing on flows whose destinations are hosted on AWS: $0.02 per GB.

Price per flow run: $0.001.

Below is a pricing example quoted on the AWS site:

Processing 10,000 records between Salesforce and Amazon Redshift daily for a month, with each transfer updating 10 records and processing 10 KB of data, results in a monthly cost of $30.01.

Refer: https://lnkd.in/dvPDUPf
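To see where the $30.01 comes from, here is a rough sketch of the arithmetic in Python. The two rates are the ones quoted above; the 30-day month, the decimal KB-to-GB conversion, and the function name `monthly_appflow_cost` are my own assumptions, not something taken from the AWS page.

```python
# Rates quoted in this post for Amazon AppFlow.
PRICE_PER_FLOW_RUN = 0.001  # USD per flow run
PRICE_PER_GB = 0.02         # USD per GB processed (destination hosted on AWS)


def monthly_appflow_cost(records_per_day, records_per_run, kb_per_run, days=30):
    """Return (flow_run_cost, data_cost) in USD for one month.

    Assumes a 30-day month and 1 GB = 1,000,000 KB.
    """
    runs_per_day = records_per_day / records_per_run
    total_runs = runs_per_day * days
    total_gb = total_runs * kb_per_run / 1_000_000
    run_cost = total_runs * PRICE_PER_FLOW_RUN
    data_cost = total_gb * PRICE_PER_GB
    return run_cost, data_cost


# 10,000 records a day, 10 records and 10 KB per transfer.
run_cost, data_cost = monthly_appflow_cost(10_000, 10, 10)
print(f"flow runs: ${run_cost:.2f}")
print(f"data processing: ${data_cost:.3f}")
print(f"total: ${run_cost + data_cost:.2f}")
```

Under these assumptions almost the whole bill comes from the number of flow runs (1,000 a day), and the data processing charge is less than a cent.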

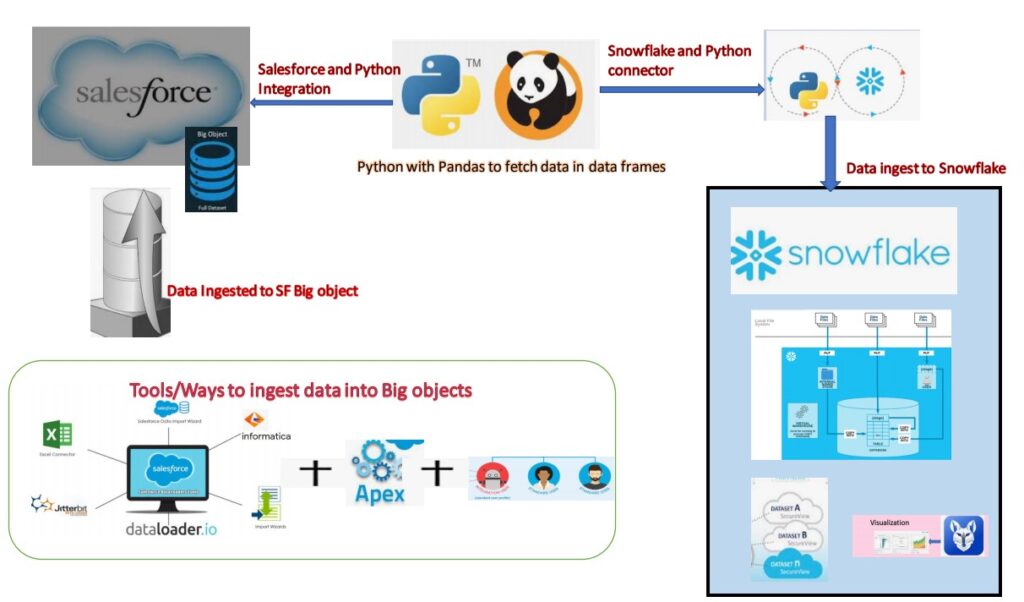

Enough theory. Now, as part of the architecture below, we are eliminating AWS AppFlow:

We use a Python framework to extract the data from Salesforce that lives in Big Objects. I am not going into the details of Big Objects, as Salesforce people know them better than I do. But for newcomers: Big Objects provide data storage that does not count against the storage limit, and let you manage massive data within Salesforce without affecting performance.
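As a first idea of what the Python side could look like, here is a minimal sketch of paging through a Big Object with a SOQL query over the Salesforce REST API. This is not the final implementation: the object name `Customer_Interaction__b`, the API version, and the `fetch` callable (anything that performs an authenticated GET and returns the parsed JSON) are assumptions for illustration only.

```python
from urllib.parse import quote

API_VERSION = "v58.0"                   # assumption: use the version your org supports
BIG_OBJECT = "Customer_Interaction__b"  # hypothetical Big Object name (note the __b suffix)


def build_query_path(soql, api_version=API_VERSION):
    """Build the REST query path for a SOQL statement."""
    return f"/services/data/{api_version}/query?q={quote(soql)}"


def iter_records(fetch, soql):
    """Yield records page by page until the response reports done.

    `fetch` is a caller-supplied function: it takes a path, calls Salesforce
    with a valid access token, and returns the parsed JSON as a dict.
    """
    path = build_query_path(soql)
    while path:
        page = fetch(path)
        yield from page.get("records", [])
        # Salesforce returns nextRecordsUrl while more pages are available.
        path = None if page.get("done", True) else page.get("nextRecordsUrl")


def fake_fetch(path):
    """Stand-in for the real HTTP call, so the sketch runs without an org."""
    if "/query?q=" in path:
        return {"done": False, "nextRecordsUrl": "/next-page", "records": [{"Id": "1"}]}
    return {"done": True, "records": [{"Id": "2"}]}


records = list(iter_records(fake_fetch, f"SELECT Id FROM {BIG_OBJECT}"))
print(len(records))
```

Keeping the HTTP call behind `fetch` means the paging logic can be tried out with a stub first and pointed at a real org later.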

In the next part of this post, I will try to implement the attached architecture and publish the steps, along with a performance perspective and pseudocode.