SSE File Encryption: During this post we will discuss an ERROR while executing the COPY command. Recently we got an issue while loading data from S3 bucket to Snowflake. According to the scenario, there were two files present in the bucket but surprisingly COPY command was failing to process one File. The command was reporting Access denied error for particular file. The same COPY command was working fine for another file present in same bucket. We were getting the below error while importing file to snowflake:

Failed to access remote file: access denied. Please check your credentials

We tried to compare the permissions for both file but nothing was different. While Comparing we found one of the file has Server-side encryption enabled. And this is the file producing error while processing.

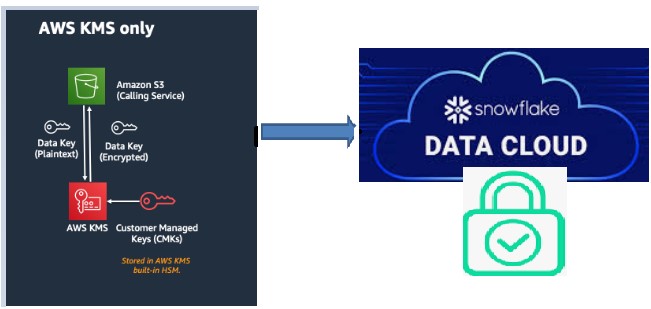

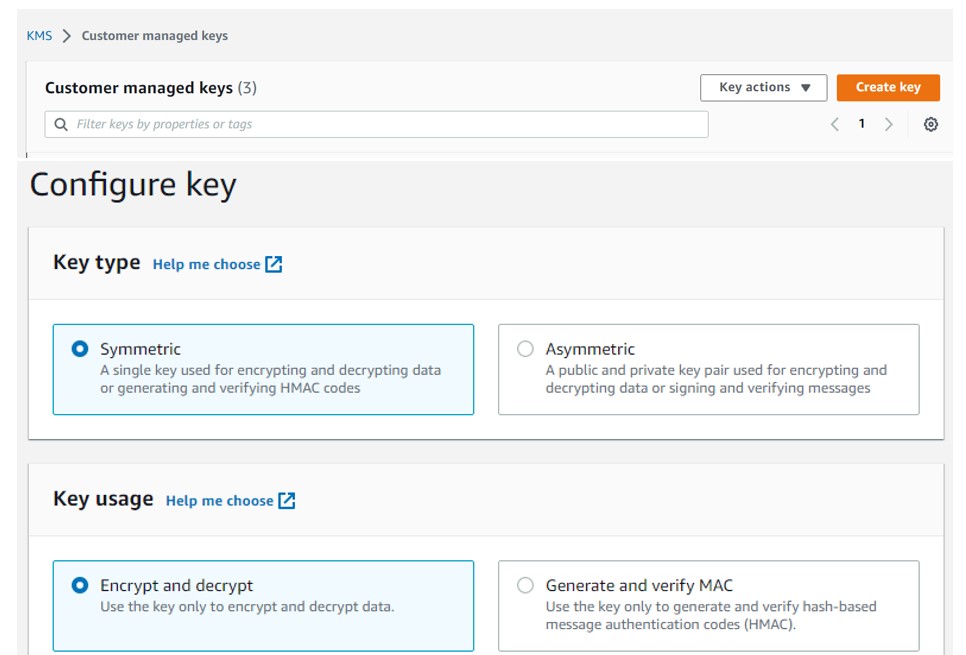

To replicate the same behavior we have created the Encryption Key using KMS (Key Management System) in AWS.

Note: We can create Customer Managed Keys using two approaches:

- AWS CLI Console

- AWS UI Console

CLI Console

- Create key using CLI

aws kms create-key;

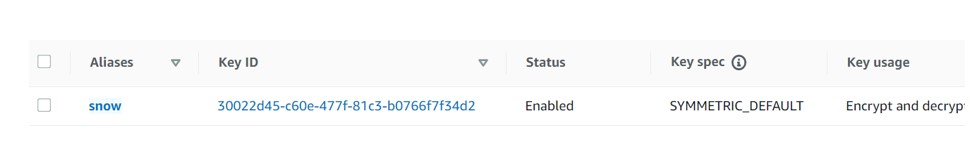

- Create the Alias name to make Key id simpler in reading and using.

- aws kms create-alias –alias-name “alias/snow” –target-key-id “df2ab8ae-7d26-4f3c-b9ea-ecce907a21ce”

AWS UI Console:

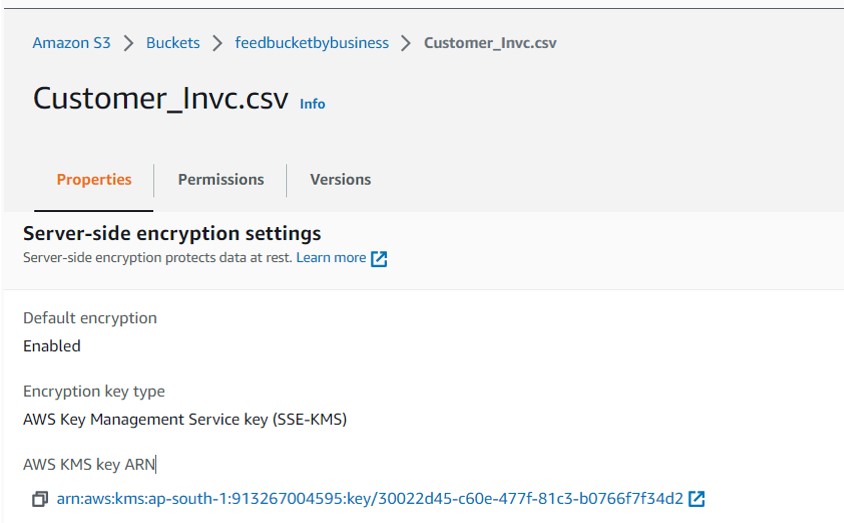

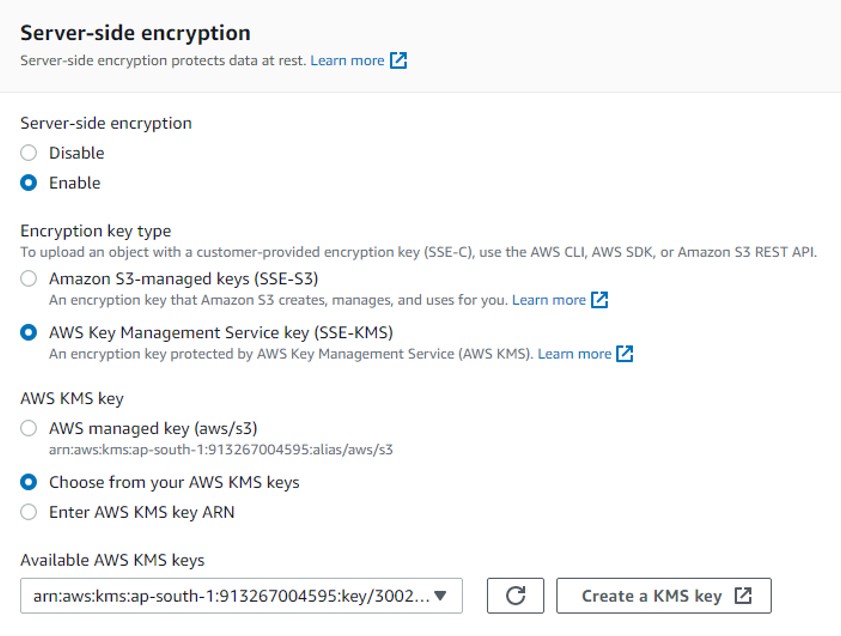

Enable the SSE (Server Side Encryption) on existing File in the Bucket.

- Go to the Bucket->Click on your File.

- Find Server Side encryption settings.

Select your Key generated in above step.

Snowflake:

Snowflake:

create or replace storage integration s3_int

type = external_stage

storage_provider = s3

enabled = true

storage_aws_role_arn = 'arn:aws:iam::913267004595:role/testsnowflake'

storage_allowed_locations = ('s3://collectionorgbucket/','s3://collectionorgdelta/','s3://feedbucketbybusiness/');

create or replace file format demo_db.public.csv_format_sample

type = csv

skip_header = 1

null_if = ('NULL', 'null')

empty_field_as_null = true;

create or replace stage demo_db.public.ext_csv_stage_sam

URL = 's3://feedbucketbybusiness/'

STORAGE_INTEGRATION = s3_int

file_format = demo_db.public.csv_format_sample;

create or replace table prod_tbl (

CUST_NUM varchar(8) null,

CUST_STAT varchar(255) null,

CUST_BAL number(20) null,

INV_NO varchar(10) null,

INV_AMT number(10) null,

CRID VARCHAR(4),

SSN VARCHAR(10),

phone number(10),

Email VARCHAR(50)

);

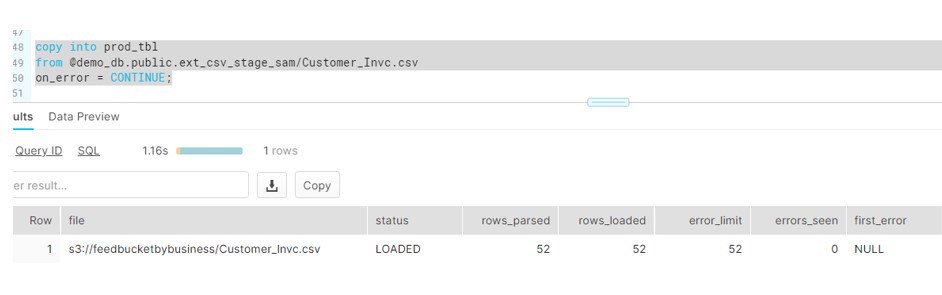

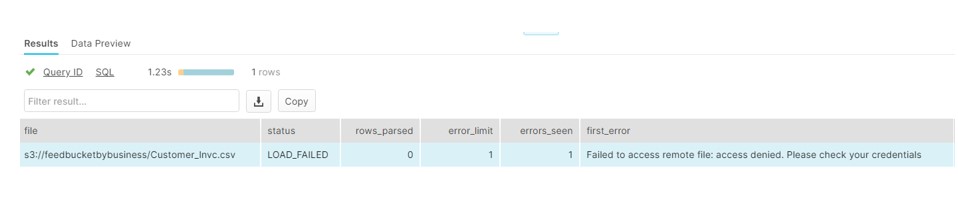

copy into prod_tbl

from @demo_db.public.ext_csv_stage_sam/Customer_Invc.csv

on_error = CONTINUE;

This will produce the below error:

We have enabled the key but not associated to the Stage. Replace the stage with below command. (Use the Key ID from AWS Console)

create or replace stage demo_db.public.ext_csv_stage_sam

URL = 's3://feedbucketbybusiness/'

STORAGE_INTEGRATION = s3_int

file_format = demo_db.public.csv_format_sample

encryption=(TYPE = 'AWS_SSE_KMS',KMS_KEY_ID='30022d45-c60e-477f-81c3-b0766f7f34d2');

At this point we have enabled the SSE and associate it to the Stage. But the Integration Role which is accessing the stage is not allowed to use the CMK. So we need to tag Role (testsnowflake) with the CMK in KMS console.

KMS Console

- Go to your key and find Key users.

- Add the Role you used in your Storage Integration.

Now try to load the file now and it will load successfully.