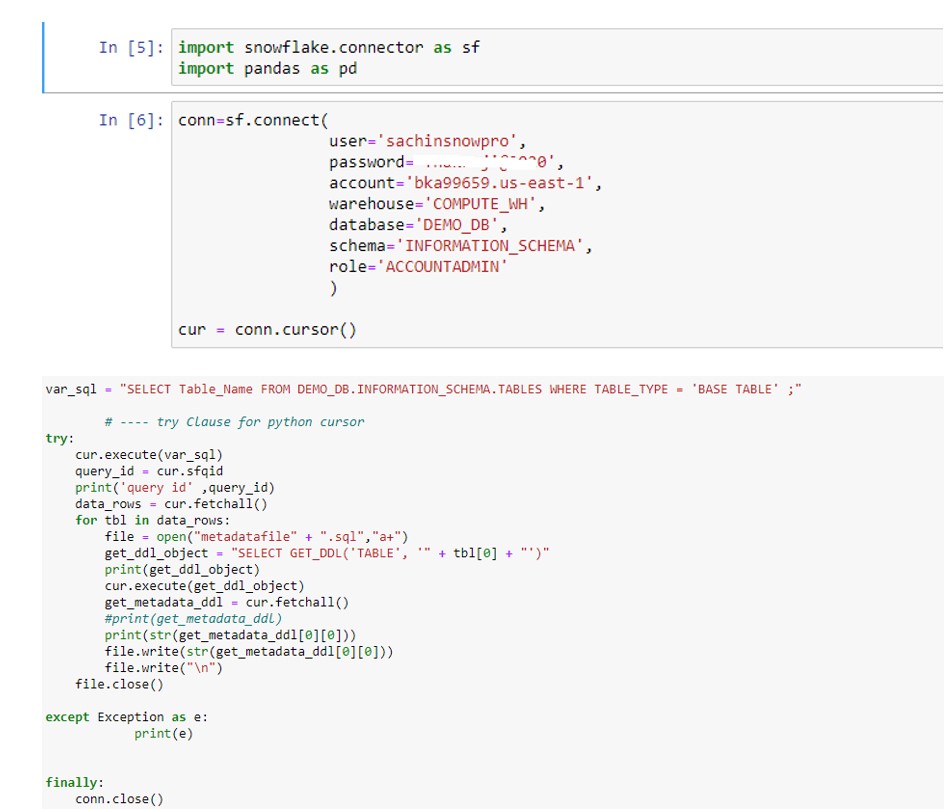

Recently, during a discussion with a colleague, a requirement came up: extract the DDL of every user-defined table in a specific database. At first the requirement seems simple. We can run the plain GET_DDL command on the database and then export the output to a file. But if the database contains, say, 100 tables, running GET_DDL by hand for every individual table is not feasible.

We needed an automated way to traverse the list of user-defined tables in the database and run the DDL command for each table. The process should also export the DDL of all extracted tables into a .sql file that we can share with another team or database, so that they can execute the file with no manual intervention.

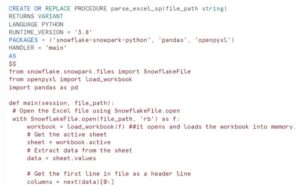

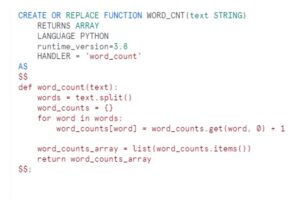

There are multiple ways to accomplish this requirement; we used Python to achieve the desired result.

var_sql: extracts the names of the user-defined tables from the Information Schema.

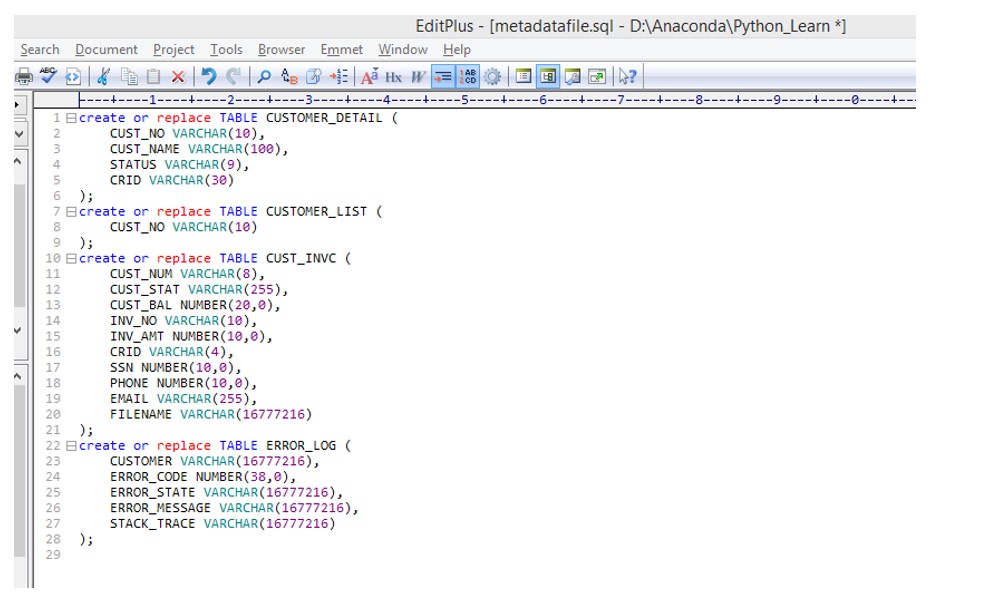
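As a rough illustration of what var_sql might hold, the sketch below builds a query against the Information Schema. It assumes a Snowflake-style `information_schema.tables` view in which user-defined tables have `table_type = 'BASE TABLE'`; the helper name `build_table_list_query` and the database name `MY_DB` are hypothetical and not taken from the original code.

```python
import re

# Assumption: database names are plain unquoted identifiers.
_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*$")


def build_table_list_query(database: str) -> str:
    """Return a query listing the user-defined tables of `database`."""
    # Reject anything that is not a simple identifier, since the name
    # is interpolated directly into the SQL text.
    if not _IDENTIFIER.match(database):
        raise ValueError(f"unexpected database name: {database!r}")
    return (
        "SELECT table_schema, table_name "
        f"FROM {database}.information_schema.tables "
        "WHERE table_type = 'BASE TABLE' "
        "AND table_schema <> 'INFORMATION_SCHEMA' "
        "ORDER BY table_schema, table_name"
    )


# Hypothetical database name, used only for illustration.
var_sql = build_table_list_query("MY_DB")
```

Filtering on `table_type` keeps views out of the list, and excluding the `INFORMATION_SCHEMA` schema leaves only the tables users created.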

file: we create metadatafile.sql, which will contain the DDL of all tables.

get_ddl_object: prepares a SQL statement of the form

SELECT GET_DDL('TABLE', '<<TABLENAME>>')

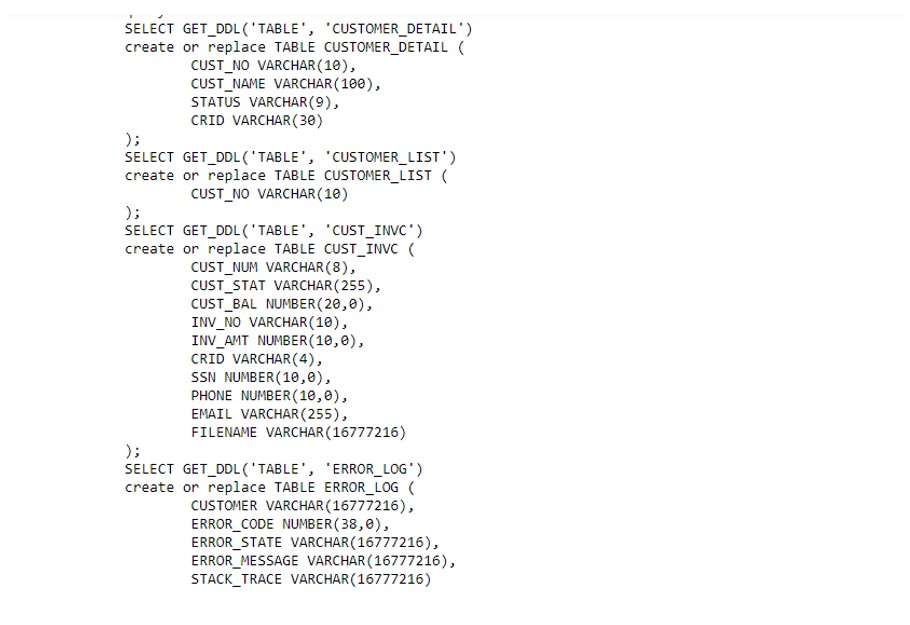
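The loop described above can be sketched roughly as follows. This is a minimal sketch, not the original script: it assumes `cursor` is any DB-API style cursor with `execute` and `fetchone`, that `tables` is the list of `(schema, table)` rows returned by the var_sql query, and that table names are simple unquoted identifiers. The function names `build_get_ddl_statement` and `export_ddl` are hypothetical.

```python
from typing import Iterable, Tuple


def build_get_ddl_statement(database: str, schema: str, table: str) -> str:
    """Build the GET_DDL statement for one fully qualified table."""
    # Double any single quotes so the name stays inside the SQL literal.
    qualified = f"{database}.{schema}.{table}".replace("'", "''")
    return f"SELECT GET_DDL('TABLE', '{qualified}')"


def export_ddl(cursor, tables: Iterable[Tuple[str, str]],
               database: str, path: str = "metadatafile.sql") -> int:
    """Write the DDL of every listed table to `path`; return the count."""
    count = 0
    with open(path, "w", encoding="utf-8") as file:
        for schema, table in tables:
            cursor.execute(build_get_ddl_statement(database, schema, table))
            # GET_DDL returns a single row with a single text column.
            ddl = cursor.fetchone()[0].rstrip()
            # Make sure each statement is terminated so the file can be
            # executed as a script without manual edits.
            if not ddl.endswith(";"):
                ddl += ";"
            file.write(ddl + "\n\n")
            count += 1
    return count
```

With the Snowflake Python connector, for example, `cursor` could come from `snowflake.connector.connect(...).cursor()`, and `tables` from running var_sql on that cursor and calling `fetchall()`. Collecting the table list before the loop avoids reusing the cursor while its earlier result set is still being read.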

The output is generated as follows:

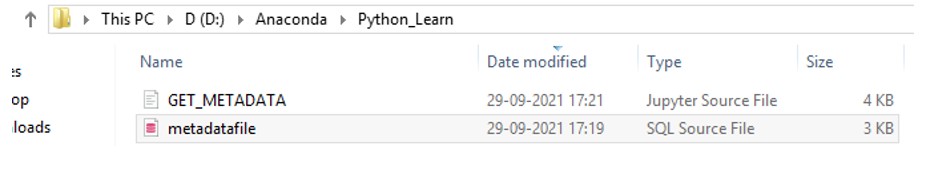

After the Python code runs, the output file is created in the directory: