Many organizations leverage Snowflake stages for temporary data storage. However, with ongoing data ingestion and processing, it’s easy to lose track of stages containing old, potentially unnecessary data. This can lead to wasted storage costs. You want to implement a monitoring solution to track the storage usage of each internal stage and identify stages with stale data files.

This blog post will showcase a Snowflake stored procedure, calculate_stage_sizes(), that helps you identify stages with stale files and optimize storage usage.

Technical Implementation:

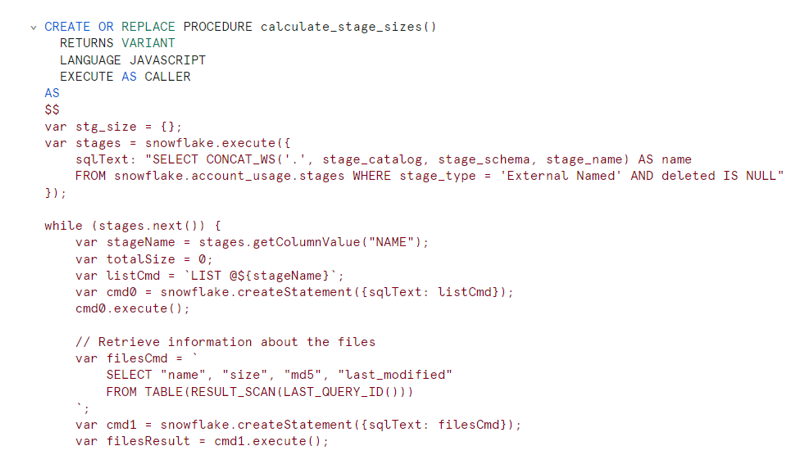

Below is the stored procedure we implemented to determine the size of individual files along with the total size in stages.

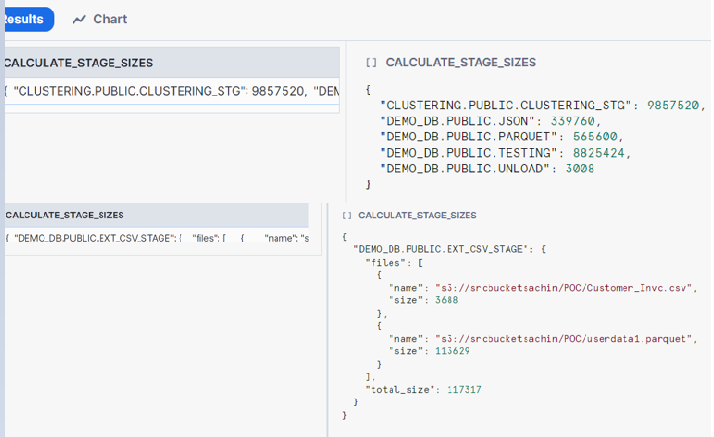

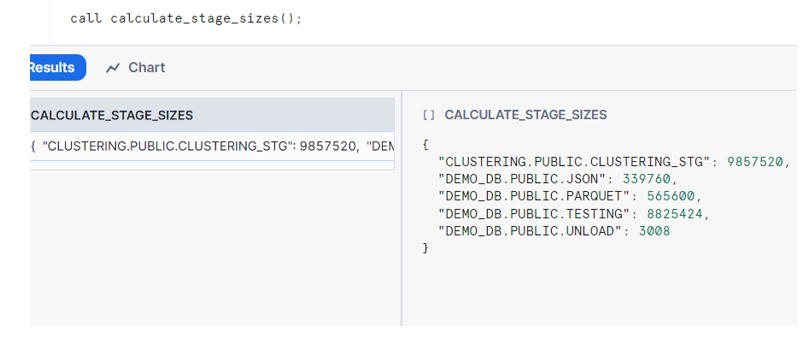

Call the procedure:

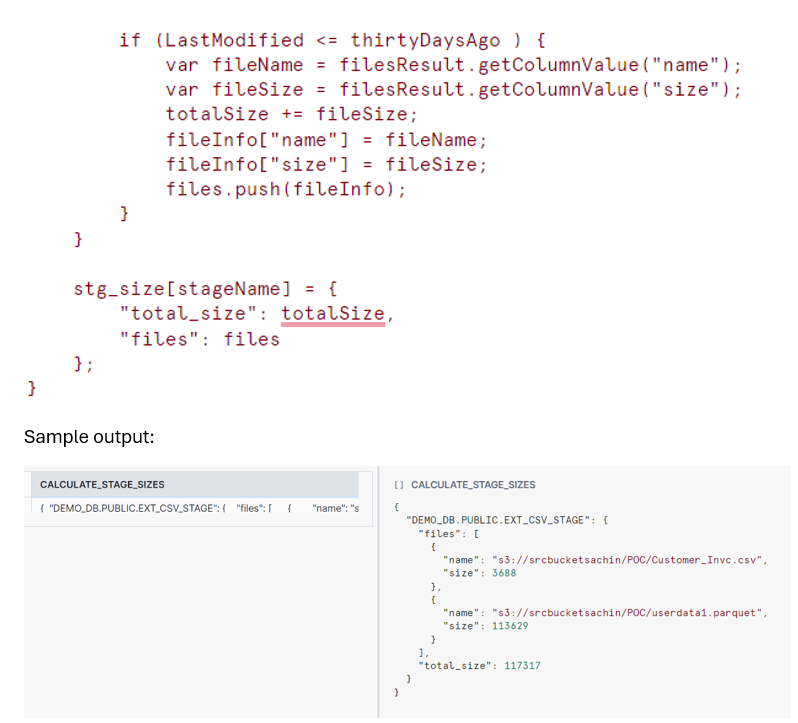

Moreover if you want the size of individual files in stage along with the total size, we can tweak the code in below way:

This stored procedure performs the following steps:

- Identifying Internal Named Stages: It retrieves a list of all internal named stages, which are user-created stages meant for temporary data storage.

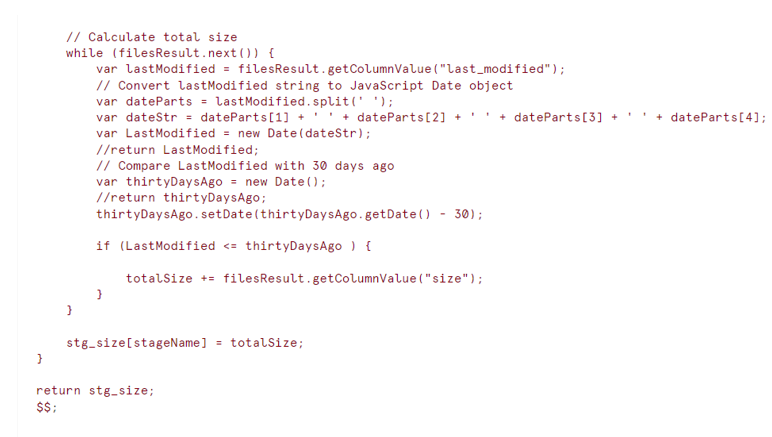

- Calculating Total Stage Size: For each stage, it iterates through the files stored within:

- It excludes deleted files.

- It retrieves the size of each file.

- It focuses on files not modified in the last 30 days (configurable) to identify potentially inactive stages.

- Building a Size Report: Finally, the procedure builds a report (stg_size) that maps stage names to their total sizes, focusing on stages containing data older than the specified timeframe.

Note: You can modify the logic within the procedure to adjust the timeframe for identifying potentially inactive files.

Benefit:

- You can utilize this procedure as a monitoring tool to analyze the storage utilization of external stages over time.

- Identify stale stages with potentially outdated data, allowing you to consider dropping or archiving them to save on storage costs.