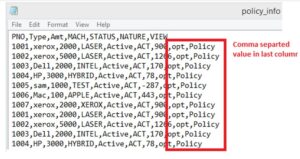

During this post we will talk about the scenario to ingest multiple CSV file into Snowflake. While the point to be noted here is that we don’t know the table structure of the files in advance. We need to create the table structure at run time and ingest the csv files accordingly.

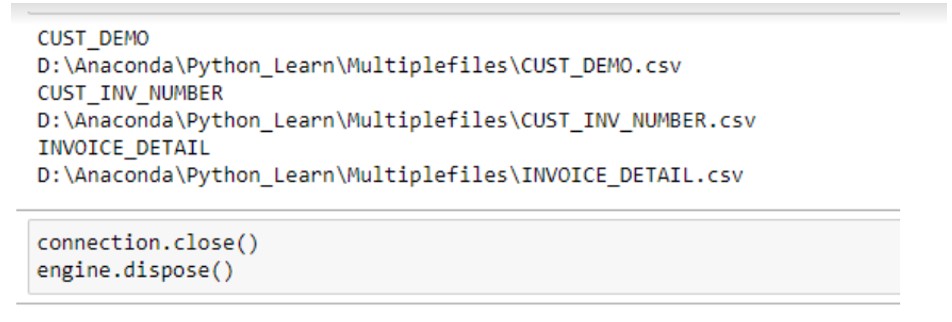

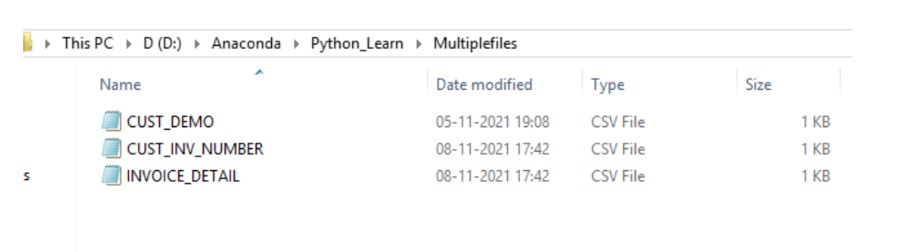

Consider the requirement we are getting multiple CSV files in one of the directory. Please note the structure of every file is different from each other i.e. one file can have 5 columns while other file can have 10 or more columns. In short the columns are varying across the files. As part of the requirement, we need to ingest these files into Snowflake in their respective tables. Copying the FILE yo Snowflake is straightforward task but the issue is we don’t know the structure of tables and hence first it is must we need to create the tables individually.

It would be possible to create the table manually if we talk about 3-4 files but in our case we are expecting 100 of files in the directory and it would be a very tedious job to create the 100 tables manually based on the file structure.

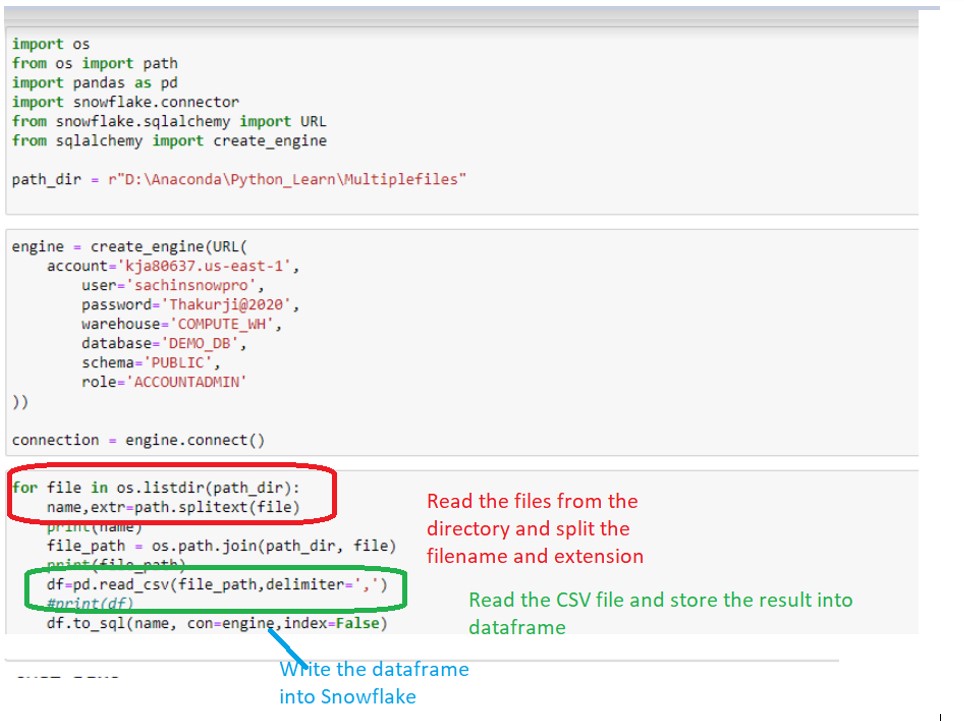

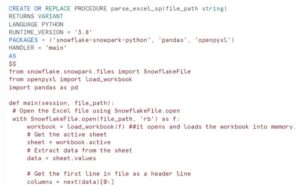

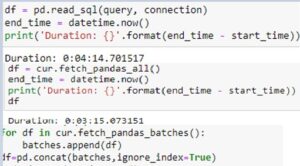

In order to automate this process we have opted the Python-Pandas path ,which will read the CSV file from the directory and store the data into respective Data frames. Later on we will connect with Snowflake and write the dataframe into snowflake as an individual table. This automated process will help us to read the CSV file and create and load the data into snowflake without manual intervention.

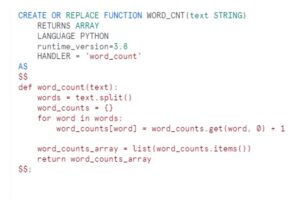

Please find the below code:

Directory Structure:

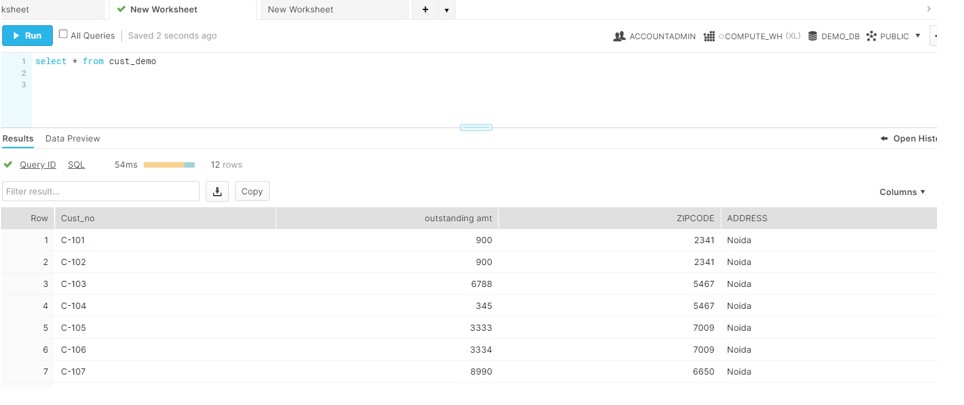

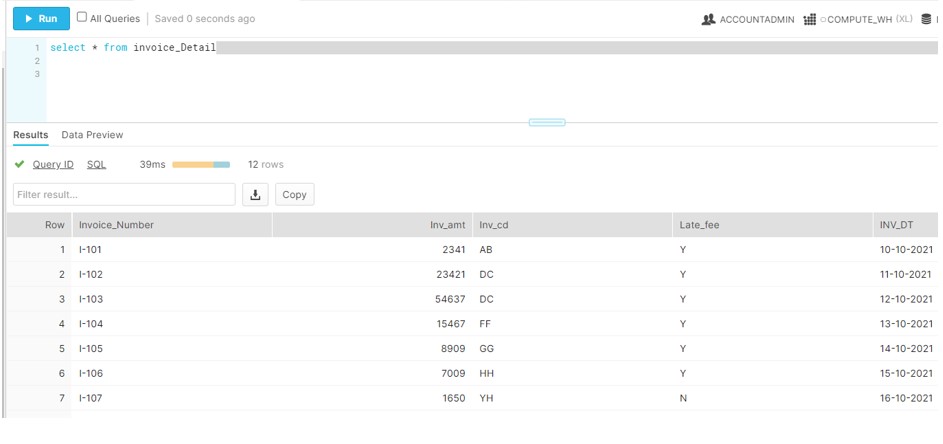

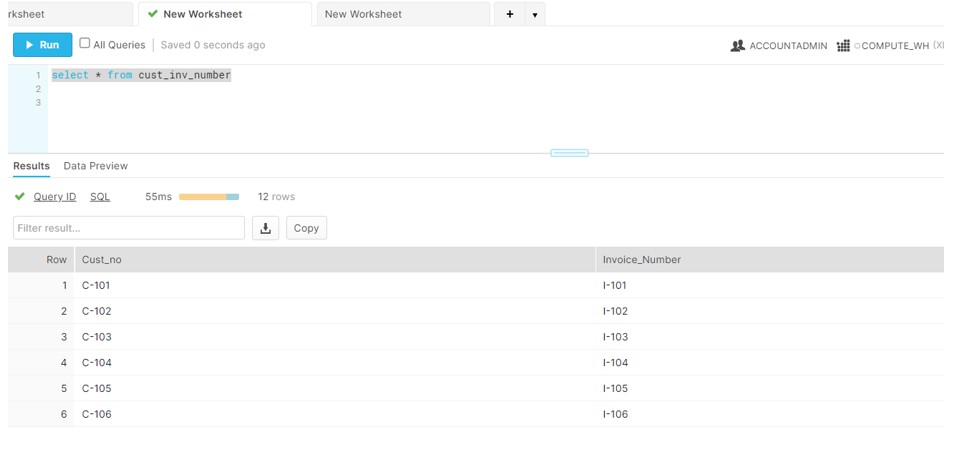

After the successful execution of the code ,following tables got created inside the snowflake:

So this way we have ingest multiple CSV file into Snowflake without manual intervention.

Sir, Will I get a git code reference of this article ?

I want to implement a similar solution. Also in your case are you creating all field as varchar datatype ?

If possible can we segregate fileds as varchar, number, timestamp while auto creating a table format?