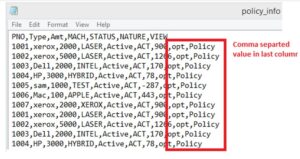

Extra comma in data:Recently came up a requirement where we need to upload the CSV files into the Snowflake table. CSV file contains the Supplier and Invoice data along with the Invoice Amount and Date. Though initially the requirement seems to be pretty straightforward . Moreover we can use the COPY command to load the data from file into table.

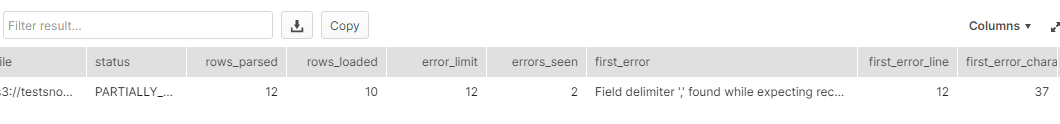

But during the data load , file got partially loaded, and few records got error out. After analysis the data in file, we found extra commas inside the data itself. Processing the file causing the below error.

ParserError: Error tokenizing data. C error: Expected 5 fields in line 12, saw 6.

SupplierName,InvoiceNumber,PartNumber,Cost,PurchaseDate

Supplier X,001-1001,2341,$500.00,1/20/14

Supplier X,001-1001,2341,$500.00,1/20/14

Supplier X,001-1001,5467,$750.00,1/20/14

Supplier X,001-1001,5467,$750.00,1/20/14

Supplier Y,50-9501,7009,$250.00,1/30/14

Supplier Y,50-9501,7009,$250.00,1/30/14

Supplier Y,50-9505,6650,$125.00,2/3/14

Supplier Y,50-9505,6650,$125.00,2/3/14

Supplier Z,920-4803,3321,$615.00,2/3/14

Supplier Z,920-4804,3321,$615.00,2/10/14

Supplier Z,920-4805,3321,$6,015.00,2/17/14

Supplier Z,920-4806,3321,$1,006,015.00,2/24/14

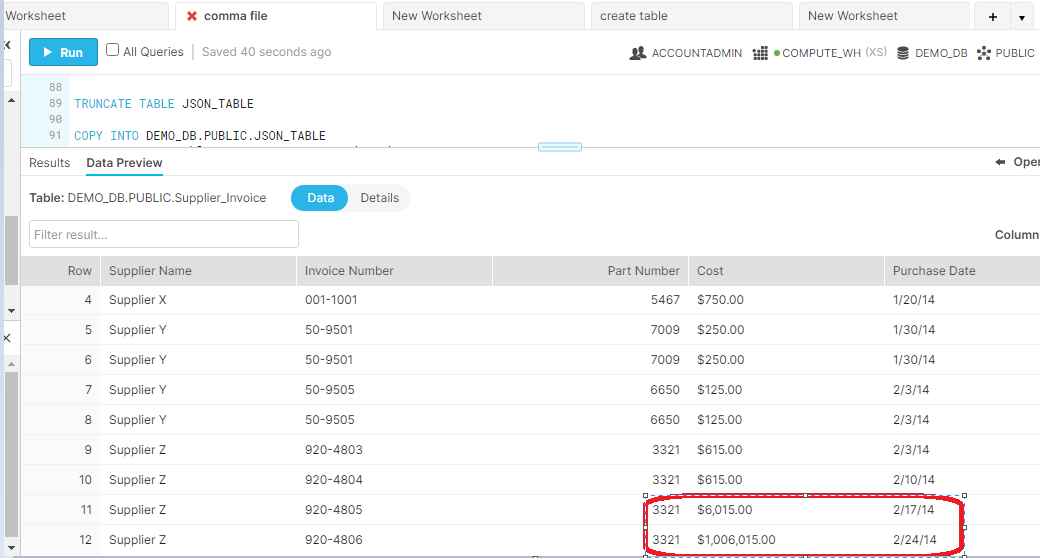

Please note the last 2 rows where Invoice amount column contains the extra “,” and this is the reason for failure.

Also one more issue was with Date format in the File and while loading the data inside the Snowflake it was throwing “Date is not recognized error”. To handle the the date format, we have specified date_format at the creation of FILE FORMAT.

create or replace file format demo_db.public.csv_format

type = csv

skip_header = 1

field_delimiter = ','

date_format = 'MM/DD/YYYY'

null_if = ('NULL', 'null')

empty_field_as_null = true;

create or replace stage demo_db.public.csv_stage

URL = 's3://testsnowflakepro/snowflake/csv/'

STORAGE_INTEGRATION = s3_int

file_format = demo_db.public.csv_format;

create or replace table supplier

(

Supplier varchar(100),Invoice varchar(50),Part VARCHAR(30),Cost varchar(50),Purchase_Date date

)

COPY INTO DEMO_DB.PUBLIC.supplier

FROM @DEMO_DB.public.csv_stage/supplier.csv

ON_ERROR = 'CONTINUE'

As we can see file got partially loaded as 2 records left unprocessed. In real time there were thousand of records which were left unprocessed because of the data (extra comma) issue. Hence our load would not be concluded as complete until all the records got processed. We have found another alternative to process the file.

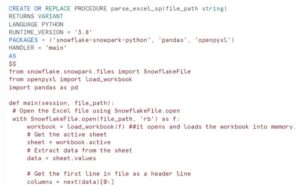

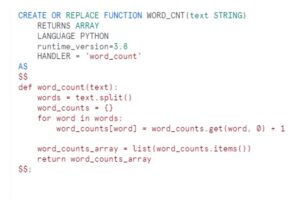

Python :

- Approach 1:

- Use of Python Pandas to read CSV file and process the file.

import os

import pandas as pd

import sqlalchemy

import snowflake.connector

from snowflake.sqlalchemy import URL

from sqlalchemy import create_engine

engine = create_engine(URL(

account='uma42255.us-xxxx-xxx',

user='xxxxxxx',

password='xxxxxxx',

warehouse='COMPUTE_WH',

database='DEMO_DB',

schema='PUBLIC',

role='ACCOUNTADMIN'

))

connection = engine.connect()

n=5

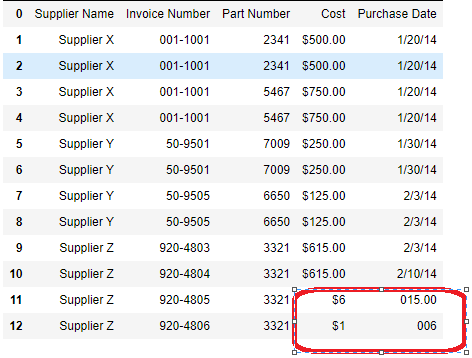

df = pd.read_csv('E:\Python\Python_Learn\Sachin\supplier.csv',usecols=range(n),header=None)

df

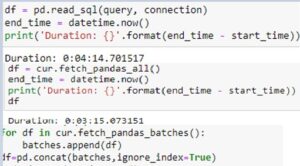

Note: Even though we are able to read the file successfully but the data for last 2 rows got corrupted, Dollar amount gets splitted into two columns and Date part got filtered out. So this approach would not be idle.

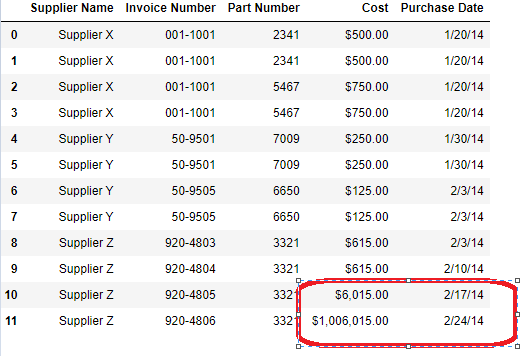

Python Approach 2:

- Modify the file and enclosed the COST column into double quotes like below

- Supplier Z,920-4805,3321,“$6,015.00”,2/17/14

Supplier Z,920-4806,3321,“$1,006,015.00”,2/24/14

- Supplier Z,920-4805,3321,“$6,015.00”,2/17/14

- Read the file in below way:

df = pd.read_csv(‘E:\Python\Python_Learn\Sachin\supplier.csv’,delimiter=’,’, quotechar='”‘)

df

df.to_sql(‘Supplier_Invoice’, con=engine, if_exists=’replace’,index=False)