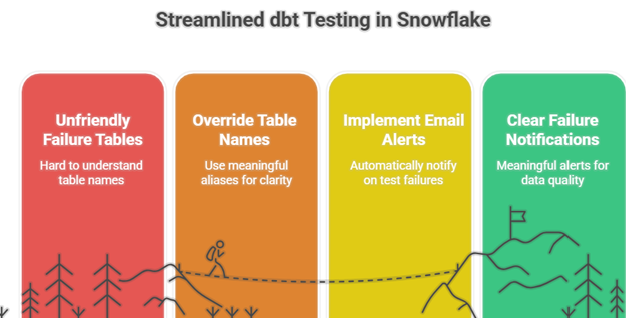

dbt Tests in Snowflake: Override Failure Tables + Email Alerts: Imagine you’re running a retail analytics pipeline on Snowflake. Every night, customer orders are loaded from multiple source systems into your Orders table. On one day, business users report that the dashboard is showing negative order amounts and orders dated in the future. Clearly, bad data has slipped through.

You already have dbt tests in place to catch these issues, But two challenges come up when you start using them in production with Snowflake:

- Ugly failure tables:

With store_failures: true, dbt persists failing rows, but names the table something like:Not exactly human-friendly. dbt_test__audit.accepted_values_orders_status__shipped__completed__pending - Silent failures:

Tests may fail quietly. Bad rows are logged, but nobody knows unless they manually query the audit schema or parse run logs.

Both problems make it harder to operationalize data quality in Snowflake.

The Goal

We built a framework around dbt + Snowflake that does two things:

- Override failure table names with meaningful aliases.

- Automatically send email alerts when tests fail — with no manual dependency.

Implementation:

Implementation:

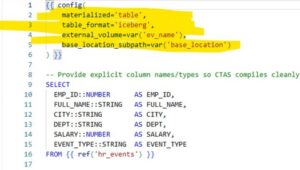

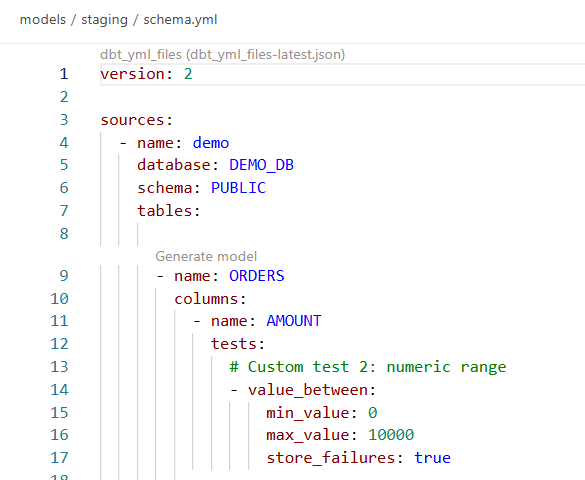

{% test value_between(model, column_name, min_value, max_value) %}

{{ config(

store_failures = true, -- persist bad rows

schema = 'AUDIT', -- central audit schema

alias = 'ORDERS_AMOUNT_RANGE_FAILS' -- readable failure table

) }}

select

order_id,

customer_id,

{{ column_name }} as invalid_amount,

current_timestamp as test_run_time

from {{ model }}

where {{ column_name }} < {{ min_value }}

or {{ column_name }} > {{ max_value }}

{% endtest %}

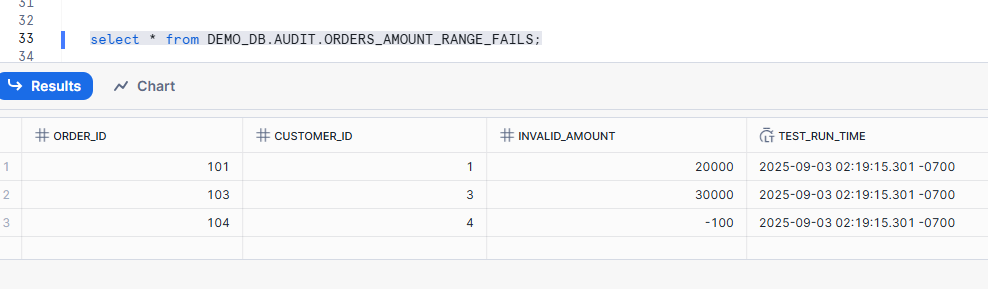

Now instead of an opaque table name, failures logs into: AUDIT.ORDERS_AMOUNT_RANGE_FAILS

This makes it much easier to monitor and trace.

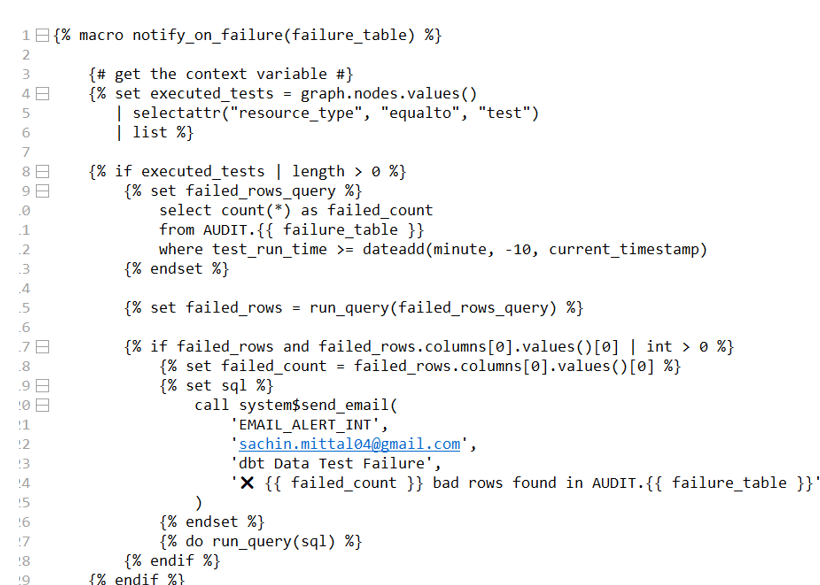

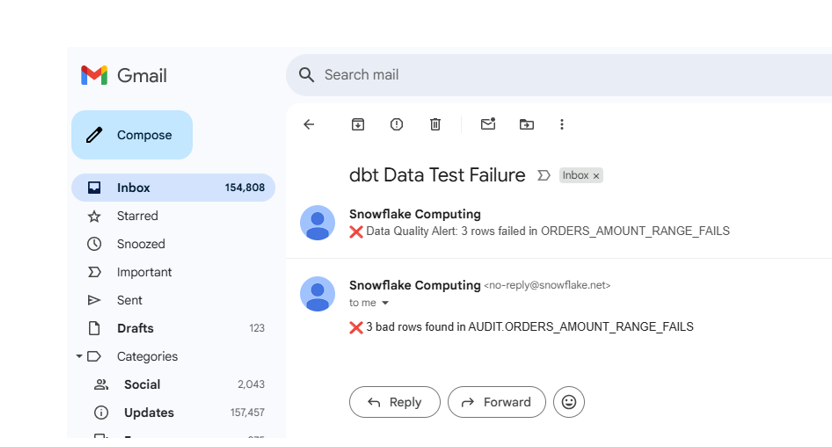

Step 2: Add a Smart Notification Macro

Next, we created a macro that:

- Checks if dbt tests were actually run.

- Queries the failure table for new rows in the last 10 minutes.

- If rows exist → sends an email using Snowflake’s SYSTEM$SEND_EMAIL.

Step 3: Hook into dbt Lifecycle

We attach this macro to the dbt lifecycle so it runs automatically at the end of a job:

# dbt_project.yml

on-run-end:

- "{{ notify_on_failure('ORDERS_AMOUNT_RANGE_FAILS') }}"

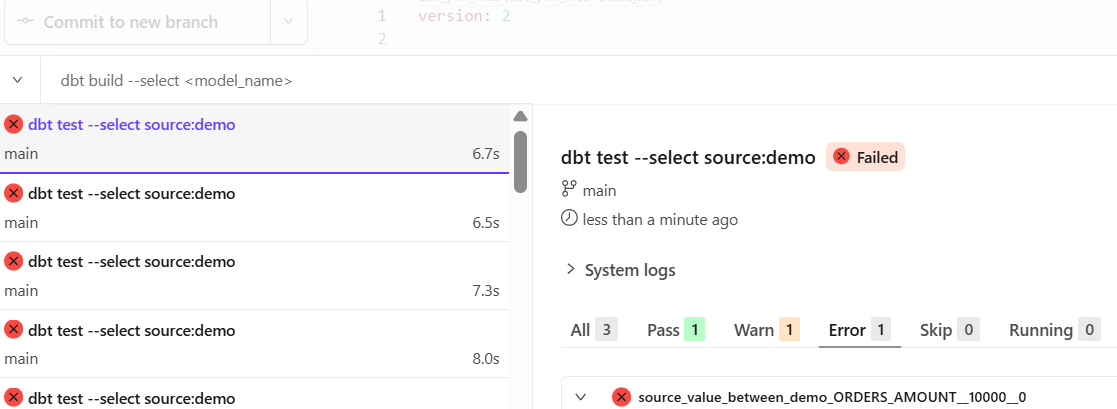

When we ran dbt test, the following happened:

- Failures were stored in AUDIT.ORDERS_AMOUNT_RANGE_FAILS.

- Macro checked the table and found 2 new rows.

- Snowflake sent an email:

Schema.yml:

Run the test against the source:

Why This Matters

With just a few lines of dbt + Snowflake config, we solved two real-world issues:

- Readable failure tables → easier root-cause analysis.

- Real-time notifications → no more silent failures.

This approach turns dbt tests into an actionable data quality framework inside Snowflake.